Subtitle: Going to ridiculous lengths to understand what doesn’t work with PhotoScan.

I took two sample videos with the GoPro a few days ago, of Dan and Rider. I want to print a color 3D model of them (Shapeways, small), just to see it done, and to have a simple process to do it. But it keeps not quite working, and it’s annoying me. So, here goes another night of experimentation. What am I missing?

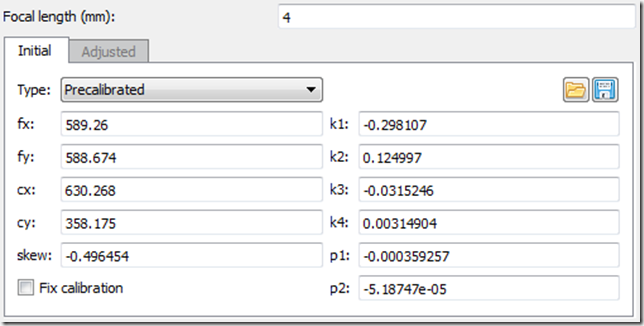

Here’s the precalibration stuff from Agisoft Lens, btw:

Check #1. How much does it matter how close or how far apart the frames are?

I extracted out at 60fps (the video is 120fps), so I have 1400 frames in a circle. That’s a lot of frames.

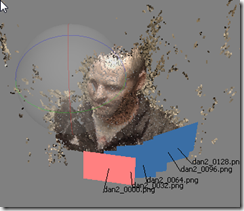

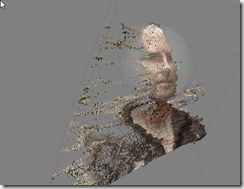

Here are sample reconstructions using just two frames – varying the number of frames apart. I’m using 4mm as the focal length, but I will play with that in the next section. Process: Align on High, Dense cloud on High. The picture on the right is what Frame # 0 looks like; the screen capture is “look through Frame 0”, zoom out to bring the head in frame, and rotate left (model’s right) about 45 degrees.

Clearly, more pictures is not the answer. The best one was 0 to 32, which was about a 6 degree difference.

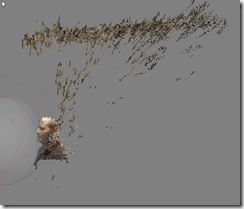

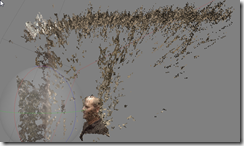

Check #2: Trying every 32 frames, how does adding more pictures improve quality?

This time I’m moving the camera up so I can see the “jaggies” around the edges.

3 frames combined (0,32,64):

4 frames combined:

6 frames combined:

7 frames combined:

The same 7 frames, this time with the wall in view, trying to line up the roof and the wall:

Check #3: Focal Length

Trying to solve for the wall jagginess.

2mm:

4mm:

6mm:

8mm: Cannot build dense cloud

5mm:

3mm:

4.5mm:

Okay, so 4.5 is wonky, but 4 and 5 are okay? It’s very hard from this angle to see any difference in quality between 3, 4, 5 and 6. 2, 7, and 8 are clearly out.**

Maybe another angle:

3mm:

4mm:

5mm:

6mm:

** Or maybe 7 is not quite out yet. Turns out, I can “align photos” once and get one result, then try aligning again and get a different result. So I retried 8 a couple of times over, and I got this:

None of this is making any sense to me. I guess I’ll stick with 4mm, for lack of a better idea. Do you see any patterns in this? Moving on.

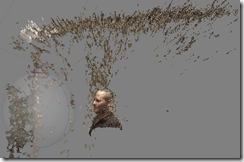

Check #4: Low, Medium, High Accuracy?

I’ve bumped it up to 17 cameras (32 frames apart). Testing for “Align Photos” accuracy (Low, Medium, High) + Dense Cloud accuracy + Depth Filtering

Aggressive is definitely the way to go; however, there are still way too many floaters!

Ah, but this image might clear that up a bit. It has to do with the velocity with which I was moving the camera. I slowed down. Hence several of the frames are not very far apart. I might need a different approach for frame selection.
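One way to sketch a velocity-independent selection is to pick frames by how far the camera has turned, not by frame count. The snippet below is only an illustration: `select_by_angle` and the per-frame angle list are hypothetical, and it assumes you already have a rough camera angle for each frame (from an earlier alignment pass or a manual estimate), which PhotoScan does not hand you in this form.

```python
def select_by_angle(angles_deg, step_deg):
    """Return indices of frames whose camera angle has moved at least
    step_deg since the previously selected frame.

    angles_deg: hypothetical per-frame camera angles, in degrees.
    """
    if not angles_deg:
        return []
    picked = [0]                  # always keep the first frame
    last_angle = angles_deg[0]
    for index, angle in enumerate(angles_deg[1:], start=1):
        if abs(angle - last_angle) >= step_deg:
            picked.append(index)
            last_angle = angle
    return picked


# Made-up angles: the camera moves 2 degrees per frame, then slows to 1.
angles = [0, 2, 4, 6, 7, 8, 9, 10, 11, 12]
print(select_by_angle(angles, step_deg=6))
```

With these made-up angles the slow stretch contributes fewer frames, which is the point: the spacing follows the camera, not the clock.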

Check #5: Compass Points Approach

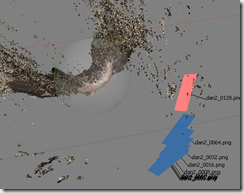

I will attempt to bisect angles and derive frames in that manner. Note that I’m not going to try the full 360 – I suspect that the subject moves a bit, so it can’t connect 359 back to 0; instead, I’m hoping to get a nice 90 degree profile, and maybe merge chunks to get it down to a single model. So let’s try to get a set of frames from the image on the left (000) to the image on the right (400).

- 0,200,400 – Aligns 2/3

- 0,100,200,300,400 – Aligns 5/5, but fails to build dense cloud

- 0,50,100,…,350,400:
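The three frame sets above come from repeatedly halving the gap between neighbouring frames. A minimal sketch of that bisection follows; `bisect_frames` is a name made up for this post. Note that it bisects frame numbers, which only matches bisecting angles if the camera moved at a constant speed, and it didn't.

```python
def bisect_frames(first, last, levels):
    """Return frame numbers from first to last after `levels` rounds of
    inserting the midpoint between every pair of neighbouring frames."""
    frames = [first, last]
    for _ in range(levels):
        refined = []
        for a, b in zip(frames, frames[1:]):
            refined.extend([a, (a + b) // 2])   # keep a, add the midpoint
        refined.append(frames[-1])              # keep the final frame
        frames = refined
    return frames


for level in (1, 2, 3):
    print(bisect_frames(0, 400, level))
```

Each extra level roughly doubles the number of frames, so it is easy to stop at the first level that aligns and builds a dense cloud.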

I have to cut this blog post short here – it looks like I have WAY too many images, and Live Writer is freaking out. Doing a quick edit pass, and then posting this as a part 1/N.

Dense Cloud refused to build multiple times on my laptop until I unchecked the ATI video card under the Preferences tab for OpenGL. It turns out that this program does not play well with OpenGL on my ATI card, which is not a surprise, as it appears to have been designed with Nvidia as the recommended hardware. After disabling this, I was able to build a dense point cloud.

Your Hero3 should be 2.7mm. Download their free software to calibrate your camera lens and it will tell you the mm and also detect all distortion. The camera lens profile can be loaded in Agisoft PhotoScan.

Finally, the sparse cloud always looks like junk until you render the dense cloud. After aligning photos, you can click on a marker and track the error between photos in case there is some issue. My guess, as a new user of this software, is that your lens guesses are way off, so don’t expect good point clouds.

Also for capture, try a white background or white photo booth so that only the subject is in frame.

Good Luck

Jerome, thank you for your feedback! Yes, I gave up on the Hero3, had much better results with a (borrowed) DSLR. I will retry the Hero3 with 2.7mm. I did try to calibrate it with Agisoft Lens, but even so, I had bad results – mostly, I think, because it’s such a wide angle that the area each pixel projects to is huge.

On the Hero3, make sure to capture at medium size (6MP on Hero3 Black) or possibly 5MP (Hero3 Silver), as distortion from wide or super wide modes is going to be horrible. Also, you can mask areas in the photo that you don’t want to process, but I have not messed with this much. Finally, your distance from the subject may affect the ability to match; in other words, distortion increases as you get closer and closer to your subject, although 3D information can also increase (just my best guess). It would be great if you could post the final output when you get it working 🙂

Don’t use fisheye.