Everybody seems to love Unit Tests

I agree, they are wonderful. I have lots of logic that is unit tested … and it’s easy to set up (especially with tools like Moq)…

But it’s not what I rely on. I have found unit testing too limited to give me the confidence I’m looking for as I write a system. I want as much tested as I can: the data access layers, how everything fits together, and whether my dependency injection is wired up correctly.

Another angle: in my current project, I’m using NHibernate as the data provider. The general consensus on mocking NHibernate is: don’t do it. Instead, use an in-memory database (that didn’t work for me; I had to maintain separate mapping files), or write an IRepository around it.

When I do that, I find that most of the logic that needs testing lives in the subtleties of my queries (LINQ and otherwise); the rest is plumbing data from one representation to another. Unit testing that plumbing is valid, but it does not cover the places where most of my failures happen. Stated in Given/When/Then (GWT) syntax, my tests would be “GIVEN perfect data, WHEN logic is executed, THEN the app does the right thing”, with “perfect data” being the elusive part.

I have tried providing a List<T>.AsQueryable() as a substitute data source in unit tests, and that works well as long as my queries don’t get complicated (involving NHibernate’s .Fetch and so on). Once the queries grew beyond what I could fake with .AsQueryable(), my “test” situation (LINQ against a list) started to differ significantly from the “real” situation (LINQ against a database), and I spent too much time getting the test just right and no time on real code.
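A minimal sketch of that substitution, assuming the query logic takes an IQueryable<T> so a test can hand it an in-memory list instead of a session. OpenAccounts and the (Name, IsClosed) tuple are hypothetical stand-ins for a real query and entity. For a simple Where/OrderBy like this, LINQ to Objects is a reasonable stand-in; provider-specific operators, collation and null handling are where it starts to drift from the database.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// The query under test is written against IQueryable<T>, so the data source can be swapped.
// (Name, IsClosed) is a hypothetical stand-in for an Account entity.
static IQueryable<(string Name, bool IsClosed)> OpenAccounts(IQueryable<(string Name, bool IsClosed)> accounts) =>
    accounts.Where(a => !a.IsClosed).OrderBy(a => a.Name);

// In a unit test, a List<T> wrapped with AsQueryable() plays the part of the database table.
var fakeAccounts = new List<(string Name, bool IsClosed)>
{
    ("Account1", true),
    ("Account2", false),
}.AsQueryable();

var open = OpenAccounts(fakeAccounts).ToList();
Console.WriteLine(string.Join(", ", open.Select(a => a.Name)));
```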

My Solution – Test Data Creators

My solution for the past 5 years, over multiple projects, has been “Integration Tests”, which engage the application from some layer (controller, presenter, etc.) all the way down to the database.

“Integration”, “Unit”, and “Functional” tests: there seem to be a lot of meanings out there. For example, one boss’s idea of a “Unit” test was that whatever “Unit” a developer was working on got tested. In that case, it happened to be the “Unit” of batch-importing data from a system using 5 command-line executables. Thus, for this article only, I define:

- Unit Test – A test called via the NUnit framework (or similar) that runs code in one target class, using mocks for everything else called from that class, and does not touch a database or filesystem

- Integration Test – A test called via the NUnit framework (or similar) that runs code in one target class, AND all of the components that it calls, including queries against a database or filesystem

- Functional Test – Something I haven’t done yet that isn’t one of the above two

- Turing Test – OutOfScopeException

Having built these several times for different projects, there are definite patterns that I have found that work well for me. This article is a summary of those patterns.

Pattern 1: Test Data Roots

For any set of data, there is a root record.

Sometimes, there are several.

In my current project, there is only one, and it is a “company”; in a previous project, it was a combination of “feed” and “company”.

The Pattern:

- Decide on a naming convention, usually “TEST_” + HostName + “_” + TestName

- Verify that I’m connecting to a location where I can delete things with impunity, before I delete something horribly important (example: if (connection.ConnectionString.Contains("dev")))

- If my calculated test root element exists, delete it, along with all its children.

- Create the root and return it.

- Use IDisposable so that it looks good in a using statement, and any sessions/transactions can get closed appropriately.

Why:

- The HostName allows me to run integration tests on a build server at the same time as on a local machine, both pointed at a shared database.

- I delete at the start of a test rather than at the end, so the test data is left behind after the test runs. Then I can query it manually to see what happened. It also leaves behind excellent material for demoing functionality to the client and for ad-hoc manual testing.

- The TestName allows me to differentiate between tests. Once I get up to 20-30 tests, I end up with a nice mix of data in the database, which is helpful when creating new systems – there is sample data to view.

Example:

```csharp
using (var root = new ClientTestRoot(connection, "MyTest"))
{
    // root is created in here, and left behind.

    // stuff that uses root is in here. looks good.
}
```
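The steps of this pattern can be sketched without a database, assuming a Dictionary stands in for the root table and its children; store, TestRootName, EnsureSafeToDelete and CreateTestRoot are hypothetical names. The IDisposable wrapper is left out so the order of operations stays visible: guard, compute the name, delete any previous root, create a fresh one.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the database: test root name -> that root's child rows.
var store = new Dictionary<string, List<string>>();

// Naming convention from the text: "TEST_" + HostName + "_" + TestName.
static string TestRootName(string hostName, string testName) => $"TEST_{hostName}_{testName}";

// Refuse to delete anything unless the connection string looks like a dev database.
static void EnsureSafeToDelete(string connectionString)
{
    if (!connectionString.Contains("dev"))
        throw new InvalidOperationException("Refusing to delete test data outside a dev database.");
}

// Guard, delete the previous run's root (its children go with it), then create a fresh root.
string CreateTestRoot(string connectionString, string testName)
{
    EnsureSafeToDelete(connectionString);
    var rootName = TestRootName(Environment.MachineName, testName);
    store.Remove(rootName);
    store[rootName] = new List<string>();
    return rootName;
}

const string devConnection = "Host=devdb;Database=app_dev";
var root = CreateTestRoot(devConnection, "MyTest");
store[root].Add("person: Alice");                        // data the test creates under its root
var rootAgain = CreateTestRoot(devConnection, "MyTest"); // a later run starts clean
Console.WriteLine($"{rootAgain}: {store[rootAgain].Count} child rows");
```

Because the delete happens at the start, a second run of the same test wipes the first run's data, while whatever the most recent run created stays in place for inspection.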

Pattern 2: Useful Contexts

Code Example:

```csharp
using (var client = new ClientTestRoot(connection, "MyTest"))
{
    using (var personcontext = new PersonContext(connection, client))
    {
        // personcontext.Client
        // personcontext.Person
        // personcontext.Account
        // personcontext.PersonSettings
    }
}
```

I create a person context, which has several entities within it, with default versions of what I need.

I also sometimes provide a lambda along the lines of:

```csharp
new PersonContext(connection, client, p => { p.LastName = "foo"; p.Married = true; })
```

to allow better customization of the underlying data.

I might chain these things together. For example, a Client test root feeds a Person context, which feeds a SimpleAccount context … or, separately, a MultipleAccount context.
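One way the context-plus-customizer idea might look, assuming each context builds its entity with defaults and then hands it to an optional Action<T>. ClientTestRoot, Person, Account, PersonContext and AccountContext here are hypothetical in-memory versions; real ones would also take a connection and insert rows. Running the lambda after the defaults means a test only spells out the fields it actually cares about.

```csharp
using System;

var client = new ClientTestRoot("TEST_build01_MyTest");
using var person = new PersonContext(client, p => { p.LastName = "foo"; p.Married = true; });
var account = new AccountContext(person, a => a.Name = "Checking");
Console.WriteLine($"{person.Person.FirstName} {person.Person.LastName} owns {account.Account.Name}");

// Hypothetical types; a real version would insert each entity through its own connection.
public sealed class ClientTestRoot
{
    public ClientTestRoot(string name) => Name = name;
    public string Name { get; }
}

public sealed class Person
{
    public string FirstName { get; set; } = "Test";
    public string LastName { get; set; } = "Person";
    public bool Married { get; set; }
}

public sealed class Account
{
    public string Name { get; set; } = "Default";
    public bool IsClosed { get; set; }
}

public sealed class PersonContext : IDisposable
{
    public PersonContext(ClientTestRoot client, Action<Person> customize = null)
    {
        Client = client;
        customize?.Invoke(Person); // defaults are already set; the test overrides only what it needs
    }

    public ClientTestRoot Client { get; }
    public Person Person { get; } = new Person();

    public void Dispose() { } // close sessions/transactions here; the data itself is left behind
}

// Chained context: built from a PersonContext, so it reaches the same client.
public sealed class AccountContext
{
    public AccountContext(PersonContext owner, Action<Account> customize = null)
    {
        Owner = owner;
        customize?.Invoke(Account);
    }

    public PersonContext Owner { get; }
    public ClientTestRoot Client => Owner.Client;
    public Account Account { get; } = new Account();
}
```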

Pattern 3: Method for Creating Test Data can be Different from What Application Uses

By historical example:

| Project | Normal App Data Path | Test Data Generation Strategy |
|---|---|---|
| Project 1 (2006) | DAL generated by CodeSmith | OracleConnection, OracleCommand (by hand) |
| Project 2 (2007) | DAL generated by CodeSmith | Generic ADO.NET using metadata from a SELECT statement + naming conventions to derive INSERT and UPDATE from DataTables |
| Project 3 (2008) | DAL generated by CodeSmith | DAL generated by CodeSmith (we had been using it for so long that we trusted it, so we used it in both places) |
| Project 4 (2010) | Existing DAL + Business Objects | Entity Framework 1 |
| Project 5 (2011) | WCF + SqlConnection + SqlCommand + Stored Procedures | No test data created! (see Pattern 7 below) |
| Project 6 (2012) | NHibernate with fancy mappings (References, HasMany, cleaned-up column names) | NHibernate with simple mappings (raw column names, no References, no HasMany, etc.) |

The test data creator is only used by tests, never by the application itself, and it maintains its own connection. However you build it, get it up and running as quickly as you can and grow it as needed; refactor it later. It does NOT need to be clean: any problems will come to light as you write tests with it.
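A minimal sketch of a “simple mapping” style creator, in the spirit of the Project 2 approach: build a parameterized INSERT straight from raw column names. Unlike that approach, the column list here is passed in rather than read from SELECT metadata. BuildInsert and the files.client columns are hypothetical, and the colon placeholder prefix matches Oracle- and PostgreSQL-style providers; other providers may expect a different prefix.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Build "insert into table (a, b) values (:a, :b)" plus the matching parameters.
// Column names come from test code, not user input, so they are interpolated directly.
static (string Sql, List<(string Column, object Value)> Parameters) BuildInsert(
    string table, IReadOnlyList<(string Column, object Value)> row)
{
    var columns = string.Join(", ", row.Select(r => r.Column));
    var placeholders = string.Join(", ", row.Select(r => ":" + r.Column));
    return ($"insert into {table} ({columns}) values ({placeholders})", row.ToList());
}

var (insertSql, insertParams) = BuildInsert("files.client", new List<(string Column, object Value)>
{
    ("clientid", 42),
    ("name", "TEST_build01_MyTest"),
});
Console.WriteLine(insertSql);
```

Each (Column, Value) pair would then become one provider parameter on the command.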

Pattern 4: Deleting Test Data is Tricky Fun

The easiest approach, which everybody seems to agree on, is: drop the database and reload. I’ve had the luxury of doing this exactly once; it’s not the norm for me. Usually I deal with shared development databases, or complicated scenarios where I don’t even have access to the development database schema.

Thus, I have to delete data one table at a time, in order.

I have used various strategies to get this done:

- Writing SQL DELETE statements by hand — this is where I start.

- Putting ON DELETE CASCADE in as many places as makes sense. For example, you probably don’t want to delete all Employees when deleting a Company (how often do we delete a company? Are you sure?), but you could certainly delete all User Preferences when deleting a User. Use common sense.

- Create a structure that represents how tables are related to other tables, and use that to generate the delete statements.

This is the hardest part of creating test data. It is the first place that breaks: somebody adds a new table, and now deleting fails because foreign keys are violated. (Long-term view: that’s a good thing!)

I got pretty good at writing statements like:

```sql
delete from c2
where c2.id in (
    select c2.id from c2
    join c1 on ...
    join root on ....
    where root.id = :id )
```

After writing 4-5 of them, you find the pattern: the delete query for a child of C2 looks very similar to the delete query for C2, with one more join added. All you need is some knowledge of which table you delete first, and where you can go after that.

How Tables Relate

I no longer have access to the codebase, but as I remember, I wrote something like this:

```csharp
var tables = new List<TableDef>();

var table1 = new TableDef("TABLE1", "T1");
{
    tables.Add(table1);

    var table2 = table1.SubTable("TABLE2", "T2", "T1.id=T2.parentid");
    {
        tables.Add(table2);
        // etc etc
    }

    // etc etc
}

tables.Reverse(); // so that child tables come before parent tables
```

I could then construct the DELETE statements from the TableDefs above, with the join condition supplied as the third parameter of each .SubTable() call.
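A rough reconstruction of the idea, not the original code: tuples stand in for TableDef, each entry records its parent and its join condition, and the DELETE for any table is built by walking up to the root and adding one join per level (the same shape as the hand-written c2 query). Table, alias and column names are placeholders.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Each entry: table, alias, index of its parent entry (-1 for the root), join condition to the parent.
// Parents are listed before children, as in the TableDef tree.
var tables = new List<(string Name, string Alias, int Parent, string JoinToParent)>
{
    ("TABLE1", "T1", -1, ""),
    ("TABLE2", "T2", 0, "T1.id = T2.parentid"),
    ("TABLE3", "T3", 1, "T2.id = T3.parentid"),
};

// Build the DELETE for one table by walking up to the root, adding one join per level.
string DeleteStatement(int index)
{
    var table = tables[index];
    if (table.Parent < 0)
        return $"delete from {table.Name} where id = :id";

    var joins = new List<string>();
    var current = table;
    while (current.Parent >= 0)
    {
        var parent = tables[current.Parent];
        joins.Add($"join {parent.Name} {parent.Alias} on {current.JoinToParent}");
        current = parent;
    }

    return $"delete from {table.Name} where id in (select {table.Alias}.id from {table.Name} {table.Alias} "
         + $"{string.Join(" ", joins)} where {current.Alias}.id = :id)";
}

// Reverse order so child tables are deleted before their parents.
var statements = Enumerable.Range(0, tables.Count).Reverse().Select(DeleteStatement).ToList();
statements.ForEach(Console.WriteLine);
```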

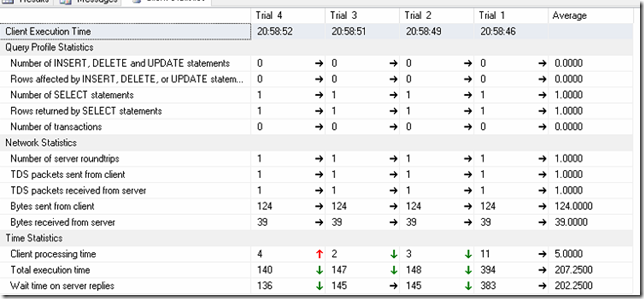

Slow Deletes

I ran into a VERY slow delete once, on Oracle. The reason was that the optimizer had decided a row scan of 500,000 rows was faster than working through the joins of this 7-table-deep delete. I ended up rewriting it:

```
select x.ROWID, ... ;
foreach ... { delete ... where ROWID = :rowid }
```

Moral(e): you will run into weird deletion problems. That’s okay, it goes with the territory.
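A variation on that workaround, sketched under assumptions: run the expensive query once to collect the keys (ROWIDs on Oracle in the story above; plain numeric ids here), then delete in batches that the optimizer can resolve by key. BatchDeletes, the id column and the batch size are hypothetical; 1000 keeps each IN list within Oracle's 1000-expression limit. Enumerable.Chunk needs .NET 6 or later.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Split previously collected keys into batches, one parameterized DELETE per batch.
static IEnumerable<(string Sql, long[] Keys)> BatchDeletes(string table, IEnumerable<long> keys, int batchSize)
{
    foreach (var batch in keys.Chunk(batchSize))
    {
        var placeholders = string.Join(", ", batch.Select((_, i) => $":k{i}"));
        yield return ($"delete from {table} where id in ({placeholders})", batch);
    }
}

// Stand-in for the keys returned by the one-time SELECT.
var collectedKeys = Enumerable.Range(1, 2500).Select(i => (long)i).ToList();
var batches = BatchDeletes("files.client", collectedKeys, 1000).ToList();
Console.WriteLine($"{batches.Count} delete statements");
```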

Circular Dependencies

Given:

- Clients have People

- Feeds have Files For Multiple Clients

- Files Load People

- A loaded person has a link back to the File it came from

This led to a situation where if you tried to delete the client, the FK from Feed to Client prevented it. If you tried to delete the feed, the FK from People back to File prevented it.

The solution was to NULL out one of the dependencies while deleting the root, to break the circular dependency. In this case, when deleting a Feed, I nulled the link from person to any file under the feed to be deleted. I also had to do the deletes in order: Feed first, then Client.
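That reasoning can be checked mechanically. A minimal sketch, assuming each root group (Client with its People, Feed with its Files) is a node and each edge means “must be deleted first”: a topological sort (Kahn's algorithm) finds no valid order while both edges exist, and finds Feed, then Client once the Person-to-File link is nulled. The group names and the DeleteOrder helper are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Kahn's algorithm over "Before must be deleted before After" edges.
// Returns Ok = false when a cycle leaves some groups undeletable.
static (bool Ok, List<string> Order) DeleteOrder(
    IReadOnlyList<string> groups, IReadOnlyList<(string Before, string After)> edges)
{
    var inDegree = groups.ToDictionary(g => g, _ => 0);
    foreach (var edge in edges) inDegree[edge.After]++;

    var ready = new Queue<string>(groups.Where(g => inDegree[g] == 0));
    var result = new List<string>();
    while (ready.Count > 0)
    {
        var next = ready.Dequeue();
        result.Add(next);
        foreach (var edge in edges.Where(x => x.Before == next))
            if (--inDegree[edge.After] == 0) ready.Enqueue(edge.After);
    }
    return (result.Count == groups.Count, result);
}

var rootGroups = new[] { "Client", "Feed" };

// Feed -> Client FK: Feed first. People -> File FK: the Client's People before the Feed's Files.
var (cycleOk, _) = DeleteOrder(rootGroups, new[] { ("Feed", "Client"), ("Client", "Feed") });

// NULLing Person -> File removes the second edge.
var (fixedOk, deleteOrder) = DeleteOrder(rootGroups, new[] { ("Feed", "Client") });
Console.WriteLine($"{cycleOk} / {fixedOk}: {string.Join(" then ", deleteOrder)}");
```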

Example:

Here’s some real code from my current project, with table names changed to protect my client:

```csharp
// OwnerCompany is an assumed entity name; the generic argument is missing in the original listing.
var exists =
    (from c in session.Query<OwnerCompany>() where c.name == companyNameToLookFor select c)
    .FirstOrDefault();

if (exists != null)
{
    using (var tran = session.BeginTransaction())
    {
        // rule #1: only those things which are roots need delete cascade
        // rule #2: don't try to NH it, directly delete through session.Connection

        // ownercompany -> DELETE CASCADE -> sites
        // sites -> manual -> client
        // client -> RESTRICT -> feed
        // client -> RESTRICT -> pendingfiles
        // client -> RESTRICT -> queue
        // queue -> RESTRICT -> logicalfile
        // logicalfile -> CASCADE -> physicalfile
        // logicalfile -> CASCADE -> logicalrecord
        // logicalrecord -> CASCADE -> updaterecord

        // GetConnection and ExecuteNonQuery(sql, parameter) appear to be project helpers (not shown).
        var c = GetConnection(session);

        c.ExecuteNonQuery(@"
            delete from queues.logicalfile
            where queue_id in (
                select Q.queue_id
                from queues.queue Q
                join files.client CM ON Q.clientid = CM.clientid
                join meta.sites LCO on CM.clientid = LCO.bldid
                where LCO.companyid = :p0
            )", new NpgsqlParameter("p0", exists.id));

        c.ExecuteNonQuery(@"
            delete from queues.queue
            where clientid in (
                select bldid from meta.sites where companyid = :p0
            )", new NpgsqlParameter("p0", exists.id));

        c.ExecuteNonQuery(@"
            delete from files.pendingfiles
            where of_clientnumber in (
                select bldid from meta.sites where companyid = :p0
            )", new NpgsqlParameter("p0", exists.id));

        c.ExecuteNonQuery(@"
            delete from files.feed
            where fm_clientid in (
                select bldid from meta.sites where companyid = :p0
            )", new NpgsqlParameter("p0", exists.id));

        c.ExecuteNonQuery(@"
            delete from files.client
            where clientid in (
                select bldid from meta.sites where companyid = :p0
            )", new NpgsqlParameter("p0", exists.id));

        session.Delete(exists);
        tran.Commit();
    }
}
```

In this case, ownercompany is the root, and almost everything else (a lot more than what’s in the comments) cascade-deletes from the tables I delete above.

I did not write this all at once! This came about slowly, as I kept writing additional tests that worked against additional things. Start small!

Pattern 5: Writing Integration Tests Is Fun!

Using a library like this, writing integration tests becomes a joy. For example, a test that only open accounts are shown:

```csharp
Given("user with two accounts, one open and one closed");
{
    var user = new UserContext(testClientRoot);
    var account1 = new AccountContext(user, a => { a.IsClosed = true; a.Name = "Account1"; });
    var account2 = new AccountContext(user, a => { a.IsClosed = false; a.Name = "Account2"; });
}

When("We visit the page");
var model = controller.Index(_dataService); // declared outside a block so the Then step can see it

Then("Only the active account is seen");
{
    Assert.AreEqual(1, model.Accounts.Count);
    // ... (etc)
    Detail("account found: {0}", model.Accounts[0]);
}
```

The GWT stuff above is for a different post; it’s an experiment in generating documentation of what should be happening.

When I run this test, the controller runs against a real data service, which could go as far as calling stored procedures, a service, or whatever else.

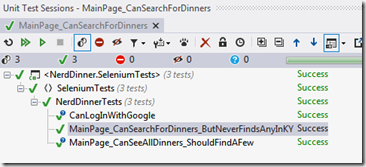

When this test passes, the green is a VERY STRONG green. There was a lot that had to go right for the test to succeed.

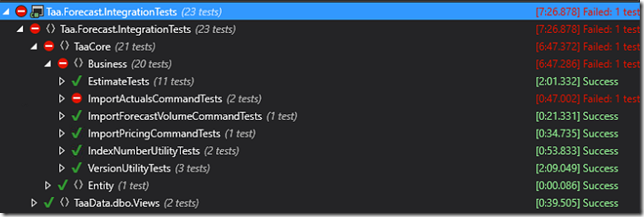

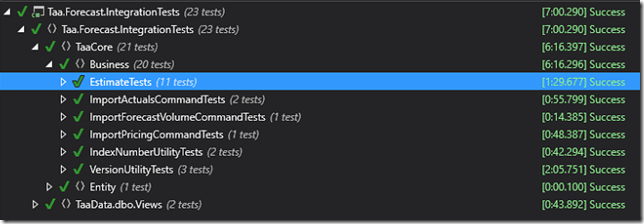

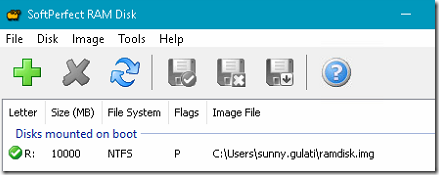

Pattern 6: Integration Tests Take Time To Iterate

Unit tests are fast: you can easily run 300-500 of them in a few seconds, so developers run ALL of them fairly often. Integration tests, not so much.

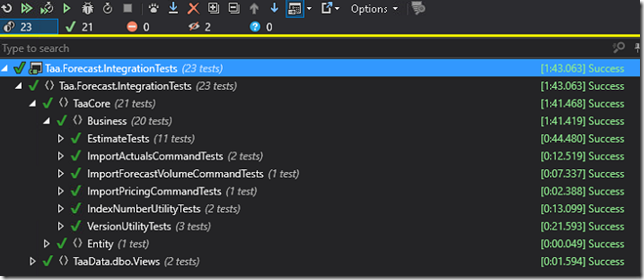

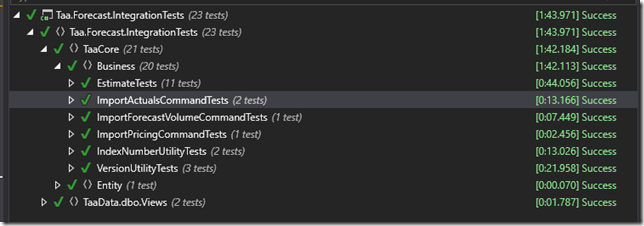

Solution: Use a CI server, like TeamCity, and run two builds:

- Continuous Integration Build – compiles, then runs the unit tests in **/bin/*UnitTests.dll

- Integration Test Build – triggered when the previous build succeeds; compiles, then runs the tests in **/bin/*Tests.dll

That is, the Integration Test build runs a superset of tests: Integration Tests AND Unit Tests.

This also relies on a naming convention for test DLLs: *UnitTests.dll is more restrictive than *Tests.dll.
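To make the superset relationship concrete, a small sketch of how the two builds' file patterns select assemblies, assuming the naming convention above; the assembly names and the Matches helper are hypothetical. Anything matching *UnitTests.dll also matches *Tests.dll, so the integration build always includes the unit tests.

```csharp
using System;
using System.IO;
using System.Linq;

// Hypothetical build output.
var assemblies = new[]
{
    "Billing/bin/Billing.dll",
    "Billing.UnitTests/bin/Billing.UnitTests.dll",
    "Billing.IntegrationTests/bin/Billing.IntegrationTests.dll",
};

// File-name suffix checks equivalent to the *UnitTests.dll and *Tests.dll patterns.
static bool Matches(string path, string suffix) =>
    Path.GetFileName(path).EndsWith(suffix, StringComparison.OrdinalIgnoreCase);

var ciBuild = assemblies.Where(a => Matches(a, "UnitTests.dll")).ToList();
var integrationBuild = assemblies.Where(a => Matches(a, "Tests.dll")).ToList();

Console.WriteLine($"CI build: {ciBuild.Count}, integration build: {integrationBuild.Count}");
```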

There’s another approach I have used, where integration tests are marked with a category and [Explicit], so that local runs skip them while the integration server includes them by category name. Over time, however, I have migrated to keeping them in separate assemblies, so that the unit test project has no references to any database libraries, keeping it “pure”.

When working on code, I usually run one integration test at a time, taking 3-4 seconds to run. When I’m done with that code, I’ll run all tests around that component.. maybe 30 seconds? Then, I check it in, and 4-5 minutes later, I know everything is green or not, thanks to the CI server. (AND, it worked on at least two computers – mine, and the CI server).

Pattern 7: Cannot Create; Search Instead

This was my previous project. Their databases had a lot of replication going on (no way to run that locally), and user and client creation was locked down. There was no “test root creation”: it got too complicated, and I didn’t have the privileges to do it even if I had wanted to tackle the complexity.

No fear! I could still do integration testing – like this:

```csharp
// Find myself some test stuff
var xxx = (from .... where ... select ...).FirstOrDefault();

if (xxx == null) Assert.Ignore("Cannot run -- need blah blah blah in DB");

// proceed with test

// undo what you did, possibly with fancy transactions
// or if it's a read-only operation, that's even better.
```

The Assert.Ignore() paints the test yellow – with a little phrase, stating what needs to happen, before the test can become active.

I could also do a test like this:

[Test]

public void EveryKindOfDoritoIsHandled() {

var everyKindOfDorito = // query to get every combination

foreach (var kindOfDorito in everyKindOfDorito) {

var exampleDorito = ...... .FirstOrDefault();

// verify that complicated code for this specific Dorito works

}

}

“Dorito” is a stand-in for a business component that came in many different varieties, with new ones being added all the time. As the other teams created new Doritos, if we didn’t have them covered (think switch … case … default: throw new NotSupportedException()), our test would break, and we would know we had to add some code on our side of the fence. (To complete the picture: our code had to do with drawing pretty pictures of the “Dorito”. And yes, I was hungry when I first wrote this paragraph.)
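The shape of that test, sketched with an in-memory list in place of the database query. Draw and the flavor names are hypothetical; the switch's default arm throws NotSupportedException as described above. Collecting every unhandled kind, rather than stopping at the first, lets one failing run list all the work needed.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the drawing code: one branch per known kind, anything new falls through.
static string Draw(string dorito) => dorito switch
{
    "Nacho" => "orange triangle",
    "CoolRanch" => "blue triangle",
    _ => throw new NotSupportedException($"No drawing code for '{dorito}'"),
};

// In the real test, this list comes from a query over the shared database.
var everyKindOfDorito = new List<string> { "Nacho", "CoolRanch", "SpicySweetChili" };

var unhandled = new List<string>();
foreach (var kind in everyKindOfDorito)
{
    try { Draw(kind); }
    catch (NotSupportedException) { unhandled.Add(kind); }
}

Console.WriteLine(unhandled.Count == 0 ? "All kinds handled" : "Unhandled: " + string.Join(", ", unhandled));
```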

Interestingly, when we changed database environments (they routinely wiped out Dev integration after a release), all tests would go to Yellow/Ignore, then slowly start coming back as the variety of data got added to the system, as QA ran through its regression test suite.

Pattern 8: My Test Has been Green Forever.. Why did it Break Now?

Unit tests only break when code changes. Not so with Integration tests. They break when:

- The database is down

- Somebody updates the schema but not the tests

- Somebody modifies a stored procedure

- No apparent reason at all (hint: concurrency)

- Intermittent bug in the database server (hint: open support case)

- Somebody deleted an index (and the test hangs)

These are good things. Given something like TeamCity, which can be scheduled to run whenever code is checked in and also every morning at 7am, I get a history of “when did it change”, because at some point it was working, and then it wasn’t.

If I have the integration tests dump what they are doing to the console, I can go back through TeamCity’s build logs, see what happened when it was last green and what it looked like when it failed, and deduce what changed.

The fun part is, if all the integration tests are running, the system is probably clear to demo. This reduces my stress significantly, come demo day.

Pattern 9: Testing File Systems

As I do a lot of batch processing work, I create temporary file systems as well. I utilize %TEMP% + “TEST” + testname, delete it thoroughly before recreating it, just like with databases.
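A minimal sketch of the same delete-then-recreate idea for the file system, assuming Path.GetTempPath() as %TEMP% and a "TEST" + test name folder; CreateTestDirectory is a hypothetical helper. As with the database roots, nothing is cleaned up at the end, so the last run's files remain for inspection.

```csharp
using System;
using System.IO;

// %TEMP% + "TEST" + test name: wipe whatever the last run left, then start empty.
static string CreateTestDirectory(string testName)
{
    var path = Path.Combine(Path.GetTempPath(), "TEST" + testName);
    if (Directory.Exists(path))
        Directory.Delete(path, recursive: true);
    Directory.CreateDirectory(path);
    return path;
}

var dir = CreateTestDirectory("CompletelyProcessExampleFile1");
File.WriteAllText(Path.Combine(dir, "input.txt"), "example data");

var again = CreateTestDirectory("CompletelyProcessExampleFile1"); // previous run's files are gone
Console.WriteLine($"{again}: {Directory.GetFiles(again).Length} files");
```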

In Conclusion

Perhaps I should rename this to “My Conclusion”. What I have found:

I love writing unit tests where it makes sense: a component with complicated circuitry that benefits from a test around that circuitry.

I love writing integration tests over the entire system even more. One simple test like “CompletelyProcessExampleFile1” tells me at a glance that everything that needs to be in place for the REAL WORLD Example File 1 to be processed is working.

It takes time.

It’s definitely worth it (to me).

It’s infinitely more worth it if you do a second project against the same database.

May this information be useful to you.

Mr. Pink was not harmed during this process. He can definitely say that he wasn’t because he knows when he was and when he wasn’t. But he cannot definitely say that about anybody else, because he doesn’t definitely know.
