I am now several days into this experiment. It’s not working quite as I had hoped, but it is working. So here’s a roadmap/dump, with links along the way:

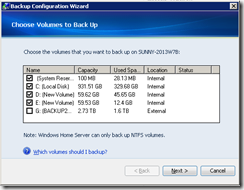

Headless Server Install

I had an HP MediaSmart EX-485 Home Server that went unresponsive. It did not appear to be a drive failure; the OS just started hanging. After rescuing everything, I had a pile of four hard drives sitting around (750G, 1TB, 1.5TB, 2TB), and I was wondering what I could do with them. Well, build a server, of course! Hey, I have this hardware sitting around: it used to be a MediaSmart EX-485 Home Server. But it doesn’t have a display, keyboard, or mouse. There are places where you can order add-on hardware for this, but that would cost me money.

I researched a couple of options, and the winner was to hook up the hard drive (I chose the 750G) to another computer, install Ubuntu on it, additionally install sshd, and only then transfer it to the MediaSmart chassis. Luckily, most driver support is built into the Linux kernel, so moving the installed drive to different hardware is not a problem.

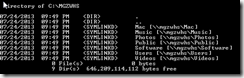

Then I downloaded PuTTY on my Windows desktop machine and used it to connect to the server (formerly named MGZWHS, now named diskarray).
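Before reaching for PuTTY, it can help to confirm that sshd on the transplanted drive is actually listening. A minimal sketch in Python, assuming the server answers to the host name diskarray on the default SSH port 22; it only checks that a TCP connection can be opened, not that a login will succeed. The function name is illustrative.

```python
import socket


def ssh_port_open(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Assumption: sshd listens on the default port 22 on the headless server.
    """
    try:
        # create_connection resolves the name and tries each address in turn
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable host names
        return False
```

For example, `ssh_port_open("diskarray")` should return True once the server has finished booting in the MediaSmart chassis.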

Adding in Drives and Making a Pool

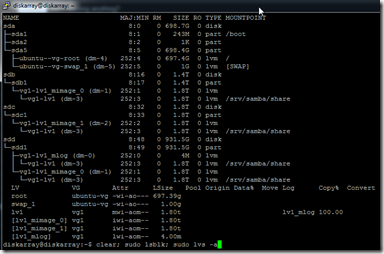

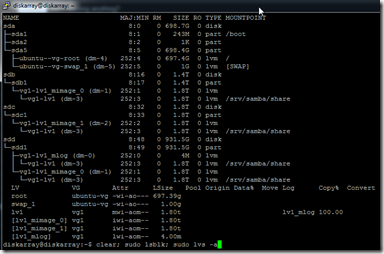

I booted the server up and checked which drives were available (sudo lsblk); it only showed me /dev/sda and its partitions. As an experiment, with the server up and running, I hot-plugged another drive and looked again. Sure enough, /dev/sdb was now present.
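The before/after comparison with lsblk can be scripted. This sketch assumes a util-linux version whose lsblk supports JSON output (`lsblk -J -o NAME,SIZE,TYPE`); you would capture that output once before hot-plugging a drive and once after, and the helper reports which whole disks appeared. The function names are illustrative, not part of any tool.

```python
import json


def disk_names(lsblk_json: str) -> list[str]:
    """Return whole-disk names from `lsblk -J -o NAME,SIZE,TYPE` output."""
    devices = json.loads(lsblk_json)["blockdevices"]
    # Partitions are nested under "children"; only whole disks have type "disk"
    return [dev["name"] for dev in devices if dev.get("type") == "disk"]


def newly_attached(before: str, after: str) -> list[str]:
    """Disks present in the `after` snapshot but not in the `before` one."""
    seen = set(disk_names(before))
    return [name for name in disk_names(after) if name not in seen]
```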

I plugged in all the drives, then went and did some research. This led me to LVM (Logical Volume Manager). There were a ton of examples out there, but all of them seemed to use hard drives of identical sizes.

At first, I thought I needed to repartition the drives like this guy did, so that I could pair up equal-sized partitions and then stripe across them. But once I got into the fun of it, it became much simpler:

- create a PV (physical volume) for each disk

- create 1 VG (volume group) covering all the PVs

- create 1 LV (logical volume) with -m 1 (mirror level 1) on the VG. This command went crazy and did a bunch of work selecting the PEs (physical extents) to use for the different legs and for the mirror log.

- create an ext4 file system on the LV
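The four steps above map onto four commands from LVM and e2fsprogs. The sketch below only builds the command lines so they can be reviewed before being run as root; the device names, the VG and LV names, and the size are hypothetical placeholders, and `-m 1` is passed exactly as in the steps above. Keep in mind that pvcreate and mkfs.ext4 destroy whatever is on the target devices.

```python
import shlex


def lvm_mirror_commands(disks: list[str], vg: str, lv: str, size: str) -> list[list[str]]:
    """Build (but do not run) the PV -> VG -> mirrored LV -> ext4 sequence.

    disks: whole-disk devices to pool (hypothetical names, e.g. "/dev/sdb")
    vg, lv: volume group and logical volume names (hypothetical)
    size: LV size handed to lvcreate -L, e.g. "2T"
    """
    if len(disks) < 2:
        raise ValueError("a mirror needs at least two physical volumes")
    cmds = [["pvcreate", disk] for disk in disks]                    # 1. one PV per disk
    cmds.append(["vgcreate", vg, *disks])                             # 2. one VG over all PVs
    cmds.append(["lvcreate", "-m", "1", "-L", size, "-n", lv, vg])    # 3. mirrored LV
    cmds.append(["mkfs.ext4", f"/dev/{vg}/{lv}"])                     # 4. ext4 on the LV
    return cmds


def as_script(cmds: list[list[str]]) -> str:
    """Render the commands as shell lines, one per step, prefixed with sudo."""
    return "\n".join("sudo " + shlex.join(cmd) for cmd in cmds)
```

Printing `as_script(lvm_mirror_commands(["/dev/sdb", "/dev/sdc", "/dev/sdd"], "pool", "data", "2T"))` gives a list of `sudo ...` lines to check by eye before running anything.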

The -m 1 option “peeks” into the physical volumes and ensures that every piece of data is stored on 2 separate physical volumes. Since each of my physical volumes is a whole disk, the two copies always end up on different disks.
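Mirroring across unequal disks raises the question of how big the mirrored LV can get. Here is a rough estimate under a simplifying model, which is an assumption and not a description of LVM’s actual allocator: each mirror leg lives on its own set of whole disks, and the mirror log and metadata take no space. The sketch tries every split of the disks into two legs and keeps the best one.

```python
from itertools import combinations


def mirrored_capacity(disk_sizes: list[int]) -> int:
    """Largest 2-way mirror size (same units as the input) under the
    simplifying assumption that the two legs use disjoint sets of disks.
    Ignores the mirror log and LVM metadata overhead."""
    total = sum(disk_sizes)
    best = 0
    n = len(disk_sizes)
    # Try every way of assigning disks to leg A; leg B gets all the others
    for r in range(1, n):
        for leg_a in combinations(disk_sizes, r):
            a = sum(leg_a)
            # The mirror can only be as large as the smaller of the two legs
            best = max(best, min(a, total - a))
    return best
```

With the nominal sizes of the four drives in this post, in GB, `mirrored_capacity([750, 1000, 1500, 2000])` returns 2500 (the 1TB and 1.5TB disks on one leg, the 750G and 2TB on the other), about half of the 5.25TB raw total. The real figure would be lower because of the mirror log, metadata, and the OS partitions on the 750G disk, if that disk is in the VG at all.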

Surprise, though: it took about 2-3 days for the mirror to finish syncing between the drives. Screen dumps are available here: http://unix.stackexchange.com/questions/147982/lvm-is-this-mirrored-is-copy-this-slow
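One way to keep an eye on a slow initial sync like this is to sample the sync percentage periodically and extrapolate. This sketch assumes the output of `lvs --noheadings -o lv_name,copy_percent` (the column lvs labels Cpy%Sync) and a constant sync rate; real resync speed varies with disk load, so treat the estimate as rough. The helper names are hypothetical.

```python
def parse_copy_percent(lvs_output: str) -> dict[str, float]:
    """Parse `lvs --noheadings -o lv_name,copy_percent` output into {lv: percent}."""
    result = {}
    for line in lvs_output.splitlines():
        fields = line.split()
        # LVs that are not mirrored have an empty copy_percent column,
        # so their line has only the name and is skipped here
        if len(fields) == 2:
            result[fields[0]] = float(fields[1])
    return result


def eta_seconds(pct_then: float, pct_now: float, elapsed_s: float) -> float:
    """Linear estimate of seconds until 100%, from two samples elapsed_s apart."""
    progress = pct_now - pct_then
    if progress <= 0:
        raise ValueError("no sync progress between samples")
    # Remaining percent divided by the observed percent-per-second rate
    return (100.0 - pct_now) * elapsed_s / progress
```

For example, going from 40% to 50% in one hour leaves `eta_seconds(40.0, 50.0, 3600)` = 18000 seconds, about five more hours.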

Creating a Samba Share

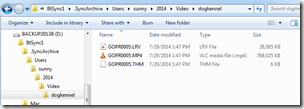

I then roughly followed these directions to create a Samba share. It’s not quite right yet: the permissions on files created through the share don’t match the permissions on the other files. Still, from my Windows machine I can definitely see the files on my server, and it’s fast.
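One common way to make files created over Samba match the ones btsync writes is to have Samba act as that same Unix user. A sketch, assuming the btsync user is diskarray (as described below) and a hypothetical share name and mount point; `force user`, `create mask`, and `directory mask` are standard smb.conf share options, and the rendered section would be appended to /etc/samba/smb.conf before restarting Samba. This is a suggested approach, not what the directions I followed used.

```python
import configparser
import io


def share_stanza(name: str, path: str, owner: str) -> str:
    """Render a Samba share section that forces one Unix owner for new files.

    name and path are hypothetical; owner should be the account btsync runs as.
    """
    cfg = configparser.ConfigParser()
    cfg[name] = {
        "path": path,
        "read only": "no",
        "browseable": "yes",
        # Files and directories created over SMB are owned by this user,
        # the same one btsync writes as, so ownership matches on both sides
        "force user": owner,
        "create mask": "0664",
        "directory mask": "0775",
    }
    buf = io.StringIO()
    cfg.write(buf)  # smb.conf uses the same "key = value" INI layout
    return buf.getvalue()
```

`force user` makes every connection to the share operate as that account, which is convenient on a single-user home server but worth reconsidering if several people use it.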

NB: You can see the .sync files and the lost+found directory of the ext4 file system.

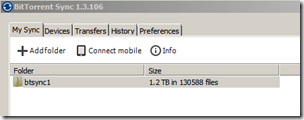

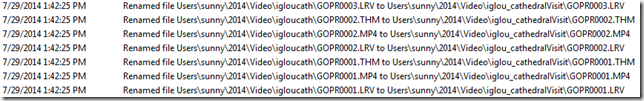

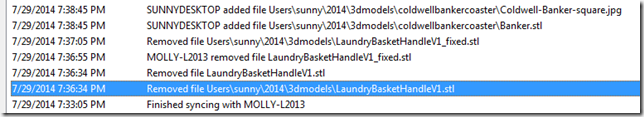

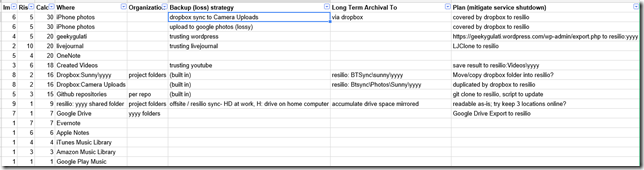

Syncing Via BtSync // stuck

I then followed these directions to install btsync, and then attempted to sync my 1.2TB of data from my Windows box to diskarray. It got part of the way there (385MB), creating all the files as user “diskarray” (herein lies my Samba file-sharing problem), but then it got stuck. Windows btsync knows it needs to send 1.1G of stuff to diskarray; they both come online, and nothing gets sent. There are ways to debug this: I know how to turn on debug logging in Windows, but have not yet followed the directions for Linux. Eventually, I hope to fix it.

// todo: Simulating Drive Failures

I did attempt a drive failure earlier, but that was before the copy was 100% done, so it was, shall we say, a little hard to recover from. Later on, I plan to pull one of the drives out while the server is running and see how I can recover. Maybe I’ll even upgrade the 1TB to a 2TB, if I ever take apart the external hard drive I used to have on the TiVo. What should happen is that the LV degrades from “mirrored” to “normal”, and the unmirrored partitions become unused. We shall see.

BitTorrent Sync can be downloaded here: