I’ve been learning a lot of new things with this client – SSIS, SSRS, and now we’ve also implemented a workflow using SSDT. In short: This is a well supported way to check your database schema and seed data into source control. So, looking back, what are the resources that I wish I had known going in?

Installation

Near as I can tell, SSDT is installed if/when you install SQL Server Express. There’s a basic version also installed if you install VS2012 Premium and up, supposedly.

Near as I can tell, SSDT is installed if/when you install SQL Server Express. There’s a basic version also installed if you install VS2012 Premium and up, supposedly.

It can also be installed directly via http://msdn.microsoft.com/en-us/jj650015

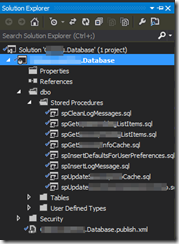

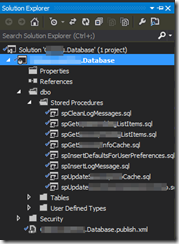

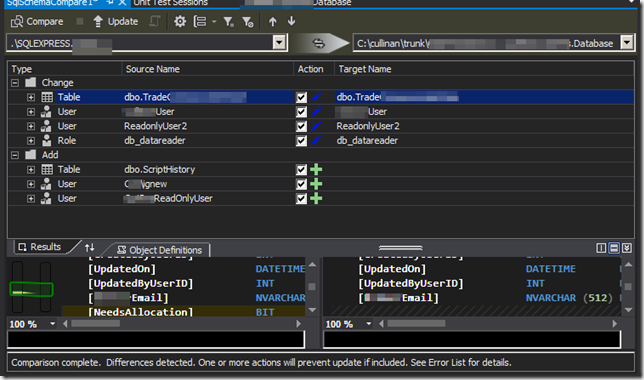

Once installed, you’ll be able to do Schema Compares, Data Compares (useless to me?), and database projects (*.sqlproj). As a lot of people don’t seem to have it installed, I usually put it in a different solution than the main project solution.

Capturing an Existing Database into a Database Project

- Create database project; If you choose to go blank at first then..

- Right click solution, compare schema, SWITCH DIRECTIONS, Update Target

- Hint: Don’t save any user objects, not till you are very comfortable with it.

As of the current version, I no longer have to have one database project, and a different server project (as I did when I looked at it in 2010 or so).

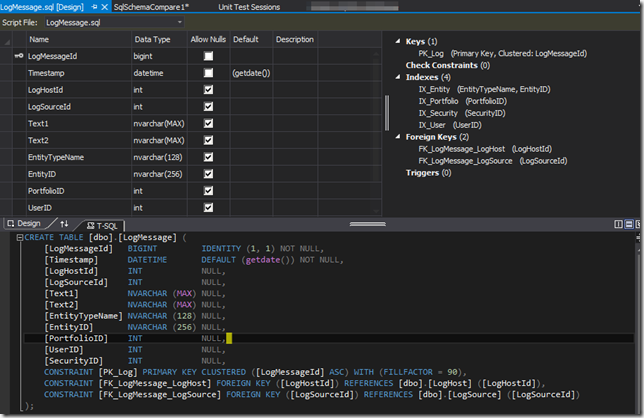

Making Changes

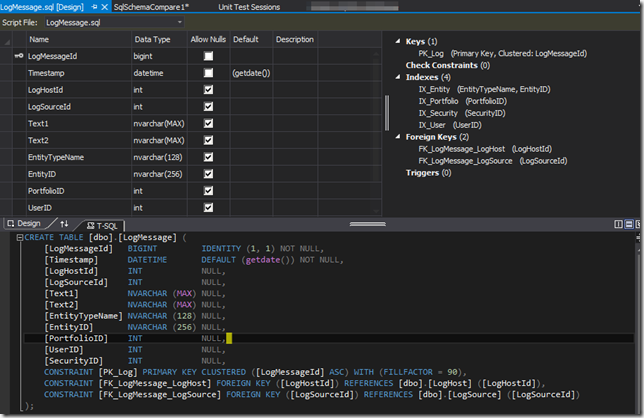

Can either make them in the database and then suck the changes in, or can edit them directly in visual studio – either graphically or in SQL.

A build action will verify every reference – for example, sproc referencing columns, etc. There’s a bit of a confusion around getting casing incorrect that you can turn off.

When you check the changes into source control, they are saved as the T-SQL version. Very self-documenting, diffable, etc.

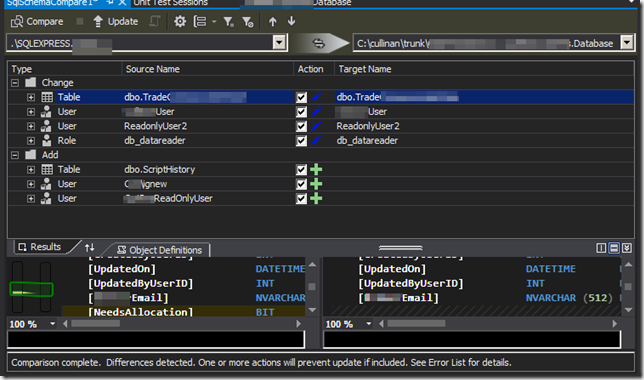

Applying Changes to Local Database Interactive

Use schema compare, select/deselect what you want to update, and hit Update.

This does NOT run pre- and post- deployment scripts.

Applying Database Changes Automated / Without Visual Studio

Compile the project, get a .DACPAC file. Then use SQLPACKAGE.EXE, located usually at C:\Program Files\Microsoft SQL Server\110\dac\bin\sqlpackage.exe. Newlines added for readability.

“If I publish it, what will it do?”

- /action:DeployReport

- /sourceFile:Flintstone.Database.dacpac

- /TargetServerName:BEDROCKCITY

- /TargetDatabasename:FlintStone

- /OutputPath:differences.xml

- /p:DropObjectsNotInSource=false

“What is absolutely everthing that is different between the two?”

- /action:DeployReport

- /sourceFile:Flintstone.Database.dacpac

- /TargetServerName:BEDROCKCITY

- /TargetDatabasename:FlintStone

- /OutputPath:differences.xml

- /p:DropObjectsNotInSource=true

“Do it. (but don’t delete those users!)”

- /action:Publish

- /sourceFile:Flintstone.Database.dacpac

- /TargetServerName:BEDROCKCITY

- /TargetDatabasename:FlintStone

- /p:DropObjectsNotInSource=false

- /p:BlockOnPossibleDataLoss=false

It runs it with pretty colors in a console app.

With Sql Server Management Studio, caveats

In SSMS, under database, tasks, there is “Update Database-Tier Application”. This does effectively the same thing, except you don’t get to choose the options above, so it usually does too much. However, you can save off a change script and then walk the change script by hand.

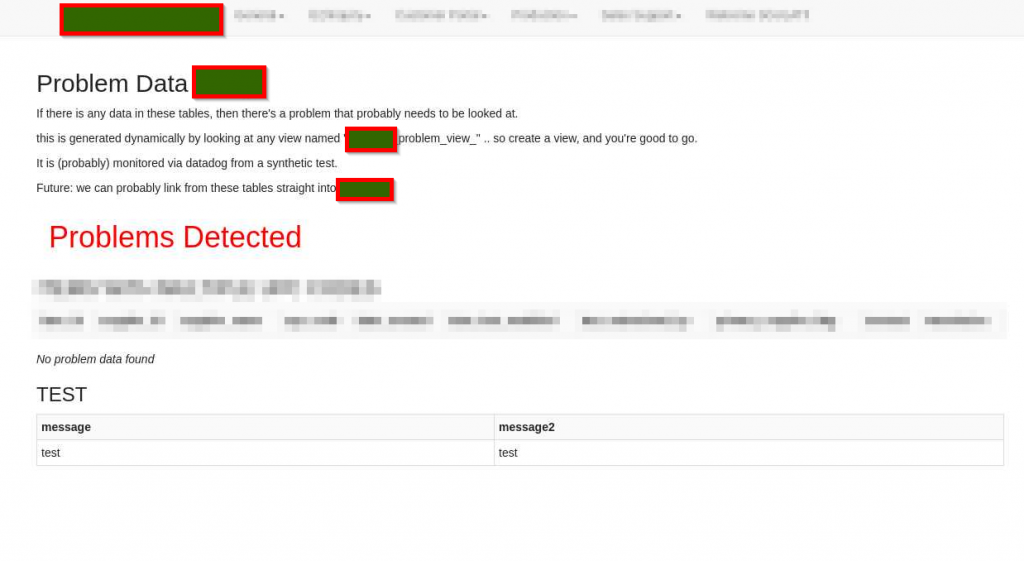

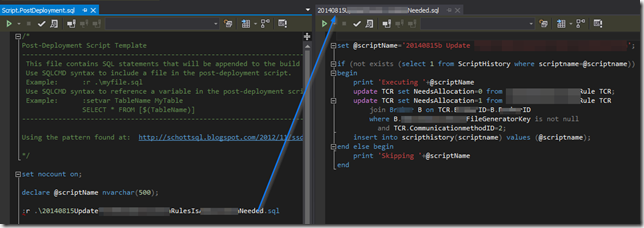

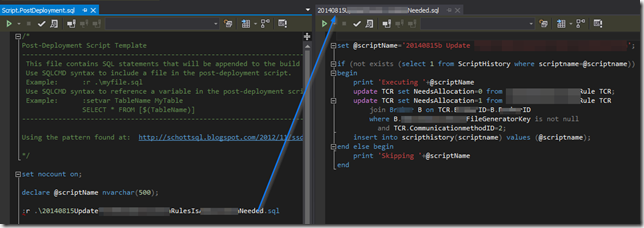

Handing Data Changes

This guy wrote an excellent blog article about it: http://schottsql.blogspot.com/2012/11/ssdt-pre-and-post-deploy-scripts.html. I extended it a little bit:

- Created a ScriptHistory table with a script name (PK) and when it was run, to avoid rerunning

- This is for the one time refactors that move data from an old location to a new location (or going from inferred settings to explicit settings), but cannot be run again, but there is no way to detect if it’s a rerun or not.

Best Practice for Security / Users / etc

I have not had a chance to do this yet, but the general idea is to create roles for permissions, have SSDT deploy the roles, but the user membership is unique per server and is handled manually. (We’re talking about situations like I am developing in COMPANY1’s domain, but the production server is in COMPANY2’s domain and Windows Auth is being used.)

In Conclusion

I watched my dba-idol Richard Svatos struggle with this in 2003.

I’ve tried to solve it in a variety of ways

I’m glad there is a usable, well supported solution now in the ecosystem which I am flourishing.