I love using LINQ (to EF to SQL). It takes care the plumbing of getting data out of the database. But there was one case where I had to swap it out for using a stored procedure.

The Setup

There were several screens that showed similar data, but constrained in different ways. They all shared common code along these lines:

Base:

iQueryable Q // defines the base query that all screens need

Overrides 1..N:

IQueryable Filter(IQueryable Q) {

return Q.Where(… additional clauses…);

// specific to the screen

}

and then calling back to some Shared code:

IQueryable Filter(IQueryable Q, FilterSettings fs) {

// apply standard filters that user could set at the app global level

}

The app used to then take this IQueryable and send it over to a ProjectAintoB() function that did the actual query (.ToList()) and then mapped that stuff out to a viewmodel / list item thing to shove into a grid:

return new GridItem { Description = Q.Description, ClientName = Q.Client.Name, ManagerName = Q.Client.Manager.FullName, Exceptions = Q.Exceptions, NotesCount = Q.Notes.Count() };

Note: this is simplified. The original GridItem class had somewhere around 35 members, across 8-9 tables.

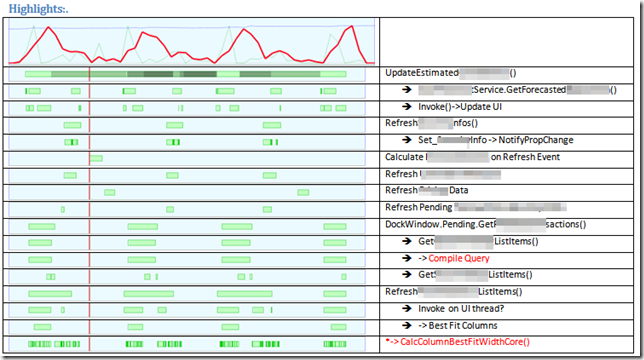

When we first started using the app, this worked well enough. It returned quickly enough to not be noticeable. However, as the app started to grow, things started to get noticeably slower, so we went onsite and did some profiling, and this is what we found:

Actual screenshot/image from original analysis document around the problem

- At the client site, it was a lot longer, with CPU pegged at 100% for 2-3 seconds.

- Turns out, most of that CPU was LINQ attempting to build the query, not actually executing the query.

- I didn’t notice this on my Dev machine because of superior hardware and multiple (8) processors. At the client, most of their processors were 2 cores, and the other core was busy doing client-ish stuff.

- This was with EF 5.0 with .Net 4.0, before they had “cached compilation of queries” (.Net 4.5). I tried upgrading to .Net 4.5; but still had problems.

- After the query was executed, there was a network spike as the data returned from the server

- Turns out, by having 9 tables returning, and the way EF work(ed|s), there was a LOT of repetition – the same Manager FullName across 5,000 rows, etc.

- This lead to a large network payload of returned data

- This was a killer over VPN. (Which only affected me, the developer, but I am important!)

The Solution

We did it in two stages:

- We kept the original concept of an IQueryable that gets modified by various layers to get down to the data that needs to be pulled back.

- We executed it, but only pulled back a single item: select g.GridItemId. On some screens, this was 50 items; on other screens, 10000 items.

- We called a stored procedure which took in a TVP (table valued parameter) of id’s, and returned multiple result sets:

- one result set for the grid items + external id’s to other tables

- one result set for all the Clients referenced by these grid items.

- one result set for all the managers referenced by these grid items.

- one result set for all the Notes referenced by these grid items.

- We read these in, and directly constructed our grid item from these results.

And it worked wonderfully.

- Average compilation time at the client site was down to 50 ms (most of the complexity was joining in to all the other tables to populate a grid item)

- The second call to the sproc finished within 20-40ms or so

- The payload across the wire was much smaller.

Here’s a screenshot of a paragraph I wrote in my excitement at getting it to run so much faster:

I also recorded a video of a before screen, and the after screen. I can’t show that to you, but it was a hit.

The Result

This, and other performance enhancements over the last 6 months – according to the client last week:

Client: Yes! Actually, 2.0 is faster than 1.0 now! We dislike going back to 1.0.

Me: