I have been on a coding adventure for the last two days.

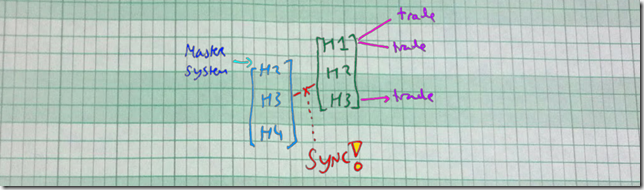

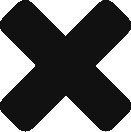

Customer has a system, which involves holdings, and then proposed trades on those holdings. However, there’s also a master system that sends a new list of holdings on a daily basis. The master system does not know about the proposed trades, it says “here’s the new list”.

The algorithm when updating the list of holdings to the new holdings went like this:

- delete anything that doesn’t have a proposed trade on it

- copy in the stuff from master, creating new ones, except…

- if an existing holding is found, instead of creating a new one, update the holding to look like what the master says it should (with various keys to try to grab the right one)

The Problem

There was a holding of Quantity = 20 that had proposed trades of –10 against it. The incoming holdings were of quantity 3 and 15. It updated 20 to 3, causing a math imbalance in the system.

Further problem on more investigation

It was also iterating the incoming list, so if I had trades against a holding H1, and H1 (or something like it) was not included in the new list, then the trades would get left behind against a stale holding.

My Solution Take 2

- Delete any holding without a proposed trade on it

- Figure out our maximum id so far

- Always insert all data from master as new. (Now we have duplicate copies)

- Walk through all the old holdings and proposed trades; try to match the proposed trades up against the new holdings

- If no match found, log it and delete the proposed trade

- Having moved all the proposed stuff over to a new parent, now delete all old holdings <= maximum id (no more duplicates)

Why I thought it was Clever

Suppose the load fails.

Since I’m only either updating or deleting proposed trades, I will not have any duplication of proposed trades.

Since I’m deleting all holdings prior to where I started inserting the new holdings, as long as I successfully complete a load, I will delete any old bad data from a previously failed load.

The Matching Algorithm

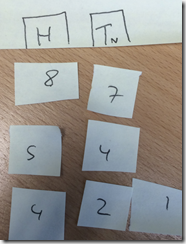

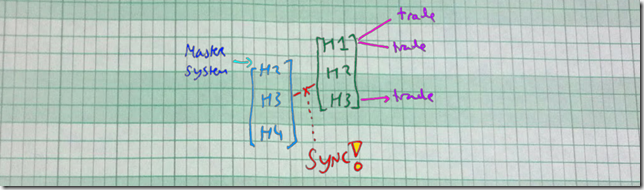

The general idea is: Sort “available buckets”, sort “Proposed Trades”, and then start matching trades to buckets.

I tested this out on paper – as in, I cut out little pieces of paper, and tried the different options; I determined sorting low to high on buckets and high to low on trades was my best bet.

I tested this out on paper – as in, I cut out little pieces of paper, and tried the different options; I determined sorting low to high on buckets and high to low on trades was my best bet. - I used a fit option of f(x1,y1,x2,y2):decimal to give me, for any trade, which bucket it would best fit in (after sizing was taken care of). (the X’s and Y’s are acquisition dates and execution prices, both of which can be “touched up” on the master system, making an exact match every time non-feasible)

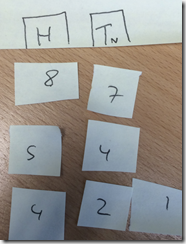

To Translate This To Code

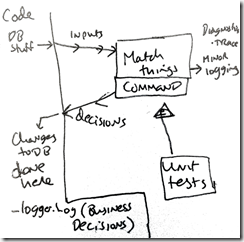

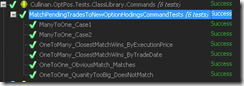

Because the matching stuff could get complicated, I made its own little command for it (see command-query separation).

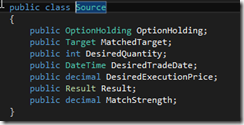

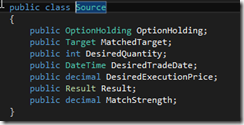

I was not shy about creating more DTO’s for the specific inputs and outputs needed by this command.

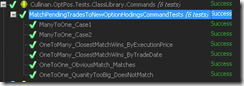

I wrote enough tests to verify that it was doing the right thing. When I do unit tests, Iassume only developers will read them, so I don’t spend a lot of time making the names super-descriptive. Some-descriptive, yes, not super-descriptive.

And then I wired it up. Turns out, I wired it into location #1, and that turned out to not work – transactions, entity framework, new transactions, missing ID’s, etc. So I ended up wiring it into location #2 (after the fact), and that worked a lot better. Good thing I had black-boxed its meaning, it was easy surgery.