Yesterday, I passed my AWS Certified Developer Associate exam. I started studying for it two weeks ago.

Actually, no, I started studying for it a year ago when I started using AWS. At the time, I thought I was going to take the Certified Solutions Architect Associate exam, but we went for this one because it’s easier, and I had two weeks to study for it.

Why two weeks? My company has been going for an AWS Partnership thing, and for that we need two certs. It wasn’t an immediate priority… until some stuff came up which really affected some of our value. We raised the flag: this needs to be a priority. Schedule it and take it, and let’s send two people so that if either one passes, we’re good. We both passed.

What did I learn in those two weeks about how to study for the exam? If I were to go back in time to my younger self, what would I tell him?

ACloud.Guru, but not like that

https://acloud.guru is VERY good. I’m keeping my subscription to it so I can listen in to some of the master-level courses during my commute. However, I had prescribed something like:

- Listen and watch all the videos

- Take all the Quizzes

- Take the practice exam

- Go read other boring stuff.

Inefficient and Fear Based.

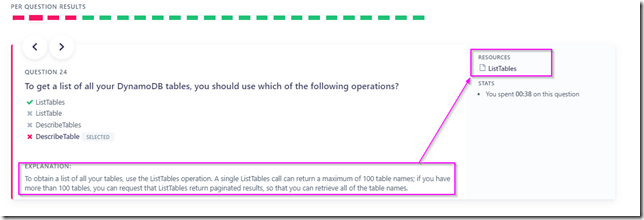

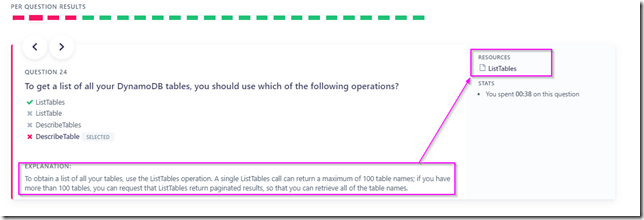

Turns out, if you get a subscription, they have this thing called an Exam simulator (beta). At the end of it you get to see what you got wrong (as well as what you got right), an explanation of each answer, and a link to more resources:

The suggestion is to attack the problem from a different angle

Thing is, both the real exam and the practice exam asked some pretty in-depth questions that the video instruction does not directly cover. Ryan does say, “Make sure you read the FAQs” and that’s 100% for real. You gotta read the FAQs.

However, the FAQs themselves reveal the core ideas central to the service you are reading about. As do the videos. My suggestion is that once you are comfortable, you get denser information faster from the FAQs.

I also found that the developer exam really wanted to know if you had used something. Called some methods. And if you hadn’t (like me: I had just barely used scan and query, since mostly I was being a sysop and using Terraform to set things up for others), then reading through the actions, attributes, headers, etc. gives you a feel for what actually working with the thing is like (assuming you have plenty of experience and have done many things in the past). You’ll get into the mind of the designers of the system, and from that, you can infer all kinds of stuff.

Some Of My Notes

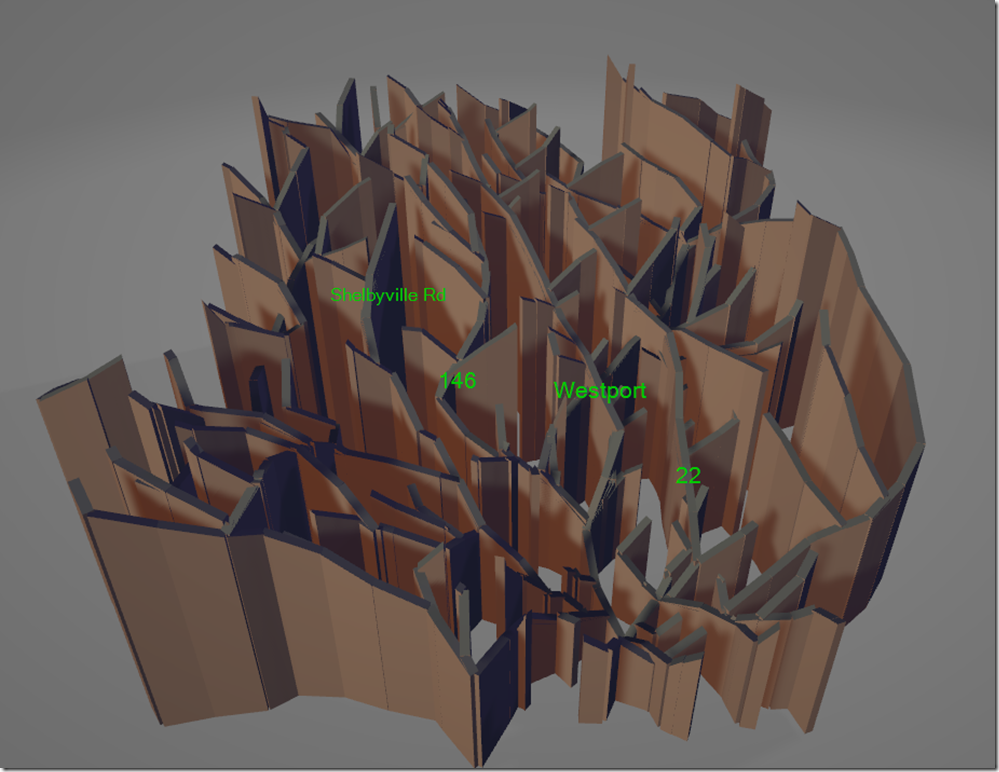

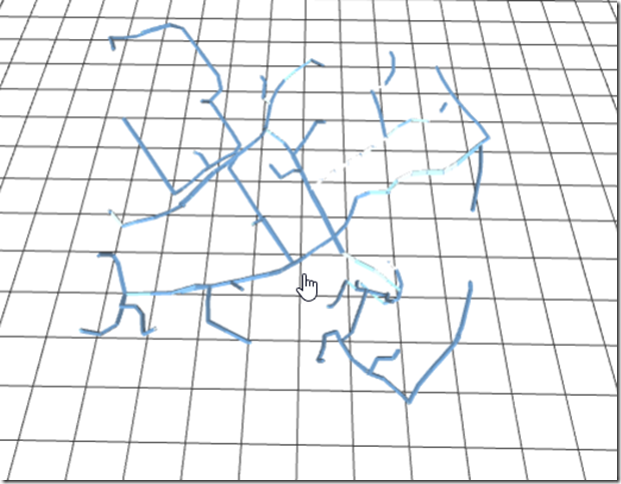

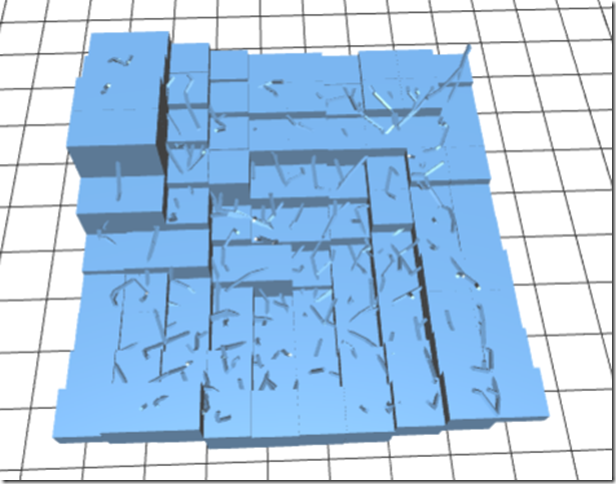

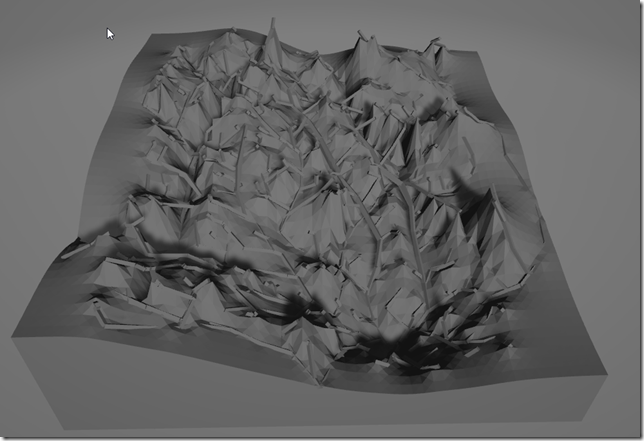

I started out taking notes on paper. Then I took notes in Google Docs. Then I switched over to Sheets.

I’m a visual person, and I like organization, and having a big grid in which to “store” information (the human brain is optimized for location tracking) was very helpful for me. If I got a concept wrong, I could go back to the place where that information was hiding on my sheet… It would probably help even more to have a background “image” to place information on. I’ll do that next time.

The Actual Exam

Location: Downtown Louisville, Jefferson Tech I think.

Here’s what was different from what I expected:

- Parking was easy to find early enough in the morning. I navigated straight to a parking lot about 2 blocks away and paid for the day (less worry!).

- The proctors were very nice, friendly people who gently guided my anxiety-stricken self through what I needed to do.

- Much to my surprise, I got there an hour early, and they invited me to take my test early. I was done by the time my actual start time came around.

- The actual exam was pretty much at the same level of detail as the acloud.guru exam was, except for more “you really gotta know this” choose-all-that-apply type questions. I detected at least one stale question on the test, which I commented on.

My weakest areas: Federation and CloudFormation, I think. Makes sense: I haven’t really had to do that, and we use Terraform for the same task.

Woot woot. Okay, gotta go run a race now.