My wife pulled me out of the office today, to take her to the State Faire. However, right now, she’s meeting with her coffee girls. So: I can put some stuff that’s been brewing in my mind into a blog post.

Working with somebody I had not worked with before, I am learning a lot of interesting things:

SQL Loops

We’re working on getting data from hundreds of sites, pooling it, aggregating useful information, and then reporting it. The size of the pooled data is around 25 million rows, and will grow by several million a year.

When we change how the aggregation works (new math, new metrics), we have to delete all the aggregated data and re-aggregate. Due to foreign keys and such, truncating the tables is not an option (from a sproc, at least). A straight delete took forever and gave us no feedback.

My coworker used this construct to provide a way to measure delete progress:

Version 1:

while exists(select top 1 TrooperId from Trooper where TrooperId > @TrooperID)

begin

select @TrooperID = Min(TrooperId) from Trooper where TrooperId > @TrooperID

DELETE FROM [aggregated].[Statistics] where TrooperId = @TrooperID

end

I have never done a while statement in SQL before. Very elegant. It got around most of the problem of trying to delete too much without any feedback.

Even better, the next time I looked at the source, I found something else:

Version 2:

WHILE (1=1)

BEGIN

DELETE TOP ( 10000 ) FROM [aggregated].[Statistics]

IF (@@ROWCOUNT = 0)

BREAK

--WAITFOR DELAY '00:00:02'

END

Even simpler!

A Case for Simpler Dependency Injection

I’m used to code like this:

private readonly DbConnectionProvider _dbConnectionProvider;

private readonly ITrooperGetQuery _TrooperGetQuery;

public TrooperAccessGetQuery(DbConnectionProvider dbConnectionProvider, ITrooperGetQuery TrooperGetQuery)

{

_dbConnectionProvider = dbConnectionProvider;

_TrooperGetQuery = TrooperGetQuery;

}

Any dependencies your code has on other components, get “injected” into your constructor. Usually used along with a Dependency Injection library; to call this code, you would do something like:

var query = IoC.Get<ITrooperGetAccessQuery>();

Or, alternately, would in turn take a IITrooperAccessGetQuery parameter in their constructor, and rely on the person calling them to make the decision…

.. bubbling up all the way till you get to some magical code in the app that ties everything together. Somewhere else. This has always annoyed me.

With this approach, seems to be that a) constructors get really large and unwieldy, and b) “what’s going on” is spread out between where DI is set up and where it is consumed(*)

(*) Some would argue that’s the whole point of DI.

Anyhow, I found this style code which needed access to the TrooperAccessGetQuery:

private ITrooperAccessGetQuery _tagq;

private ITrooperAccessGetQuery TrooperAccessGetQuery

{ get { return _tagq ?? (_tagq = new TrooperAccessGetQuery()); } }

What this says: “Hey, my system is simple, I don’t need a master system putting everything together. My components know what components they normally depend on, but you have a chance to override. If you call the default constructor, I’ll give you a component with all default components.”

Thus, I added a default constructor to my component:

public TrooperAccessGetQuery()

: this(new DbConnectionProvider(), new TrooperGetQuery()) { }

And life proceeded. Its pretty, and avoids ugly constructors, and arcane app bootstrap code, and having to choose a dependency injector.

Dapper Query Multiple

I had not seen this construct before. Useful:

public static Counts Get()

{

const string sql = @"

select count_big(1)

from imported.TrooperStatistics with(nolock)

where is_summarized=0

select count_big(1)

from imported.TrooperStatistics with(nolock)

select count_big(1)

from aggregated.StarDateHash with(nolock)

where is_aggregated_total=0

select count_big(1)

from aggregated.StarDateHash with(nolock)

where is_aggregated_time_interval=0";

var connectionProvider = ObjectFactory.GetInstance<DbConnectionProvider>();

using (var connection = connectionProvider.GetOpenConnection())

using (var multiple = connection.QueryMultiple(sql))

{

return

new Counts

{

Total = multiple.Read<long>().First(),

NotSummarized = multiple.Read<long>().First(),

NotTotaled = multiple.Read<long>().First(),

NotCalculatedTimeInterval = multiple.Read<long>().First(),

};

}

}

I learn something new every week. Thanks, JM!</P?

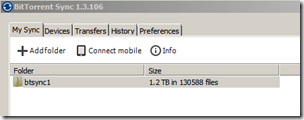

BitTorrent Sync can be downloaded here:

BitTorrent Sync can be downloaded here: