I implemented this again today, and realized it was a pattern that I fall back on regularly.

When dealing with a system with several states, and actions to be performed at each state, I usually break things up into a state diagram. Inevitably, I end up with a “State” field, the the question becomes what to put in the state field.

Take an example of sending an invite to a user to come in and use an app.

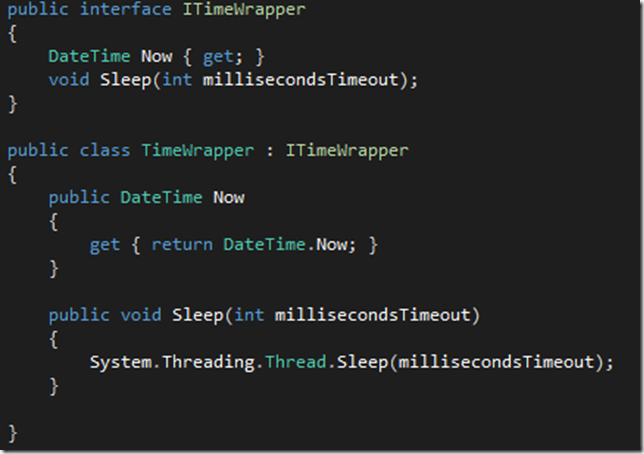

What I do not do:

Alter Table Invitation

add state int not null default(1)

enum State { Start = 1, Email = 2, Consumed=3, Expired=4 }

What I do (approximately):

alter table Invitation add

state varchar(25) null,

stateError bit(1) default(0) null,

stateTimestamp DateTime default(getdate()) not null

public static class InvitationState {

public const string SendEmail="SendEmail";

public const string WaitInbound="WaitInbound";

public const string InviteConsumed="InviteConsumed";

public const string InviteExpired="InviteExpired";

}

Why?

- It is my experience that for any flow, a future spec will come up with will add new states in the middle of that flow. If you go with an int value, you end up with the flow being 1->9->5->6. By going with a varchar, its much more obvious when doing a select from the db, and it allows for the addition of new states.

- A previous employer loved using CHAR(4) and state names like “STRT”,”MAIL”,”CONS”,”EXPR” because Oracle stored the Char(4) like an int. Very similar to Apple’s Resource Fork’s from back in the day.

- I’m not so worried about the speed difference it makes. I feel that the flexibility it gives makes up for any performance loss.

- Either we have an action state, named with a “Verb Noun”, or we have and end state, named with “Noun Verb”.

- The state names are based on what IS happening or SHOULD happen to the row, not what has already happened, except for the end states.

- For any action state, if the attempt at performing that action fails, I set the stateError bit to 1. This lets me know I need to do something to fix that; when its fixed, I can change the stateError back to 0.

- In large batch systems, I write the console based action-taker with an override switch: to work on a specific Id, even if the errorState=1; and to do it in a transaction but not commit the transaction.

- This lets me do test-things with rows that failed, while the main batch system processes all the other non-errored rows. Once I get it to working, I tell it to go ahead and commit, and the system continues on its merry way.

- The system only deals with the states that it knows. This lets the technical OPS team (whoever that is) use the state field for its out-of band processes, stuff like setting the state to ‘Ken’, for example. Because Ken is the man who knows what is up with those rows.

- In really large critical systems, there’s an audit table and a trigger that auto-inserts any changes to the audit table.

The result of this is, I get Christmas cards in the mail from former clients with phrases like “Still amazed by your code!!!”. 🙂

Hope this helps.