My wrists have been going numb more often lately. At my wife’s suggestion, I’ll bring it up with my GP at my appointment next Wednesday. Meanwhile:

- Switch to using the trackball with my left hand.

- Switch keyboards.

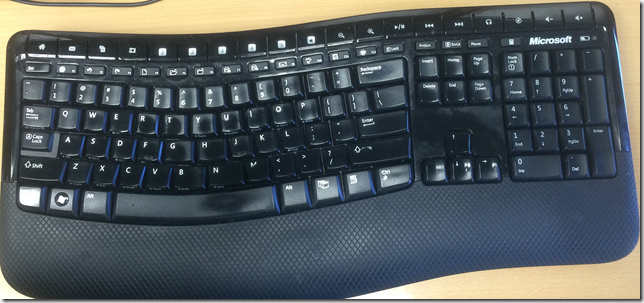

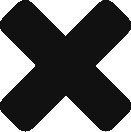

This is the keyboard I’ve been using for the last 2 years or so: the Microsoft Wireless Comfort Keyboard 5000. It has some curve to it; however, I still have ulnar deviation and pronation when using it. The key action is very light, and it’s been good to me for a while. It is also wireless, so there are fewer cords to deal with.

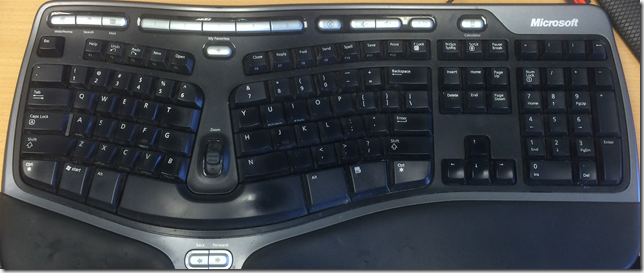

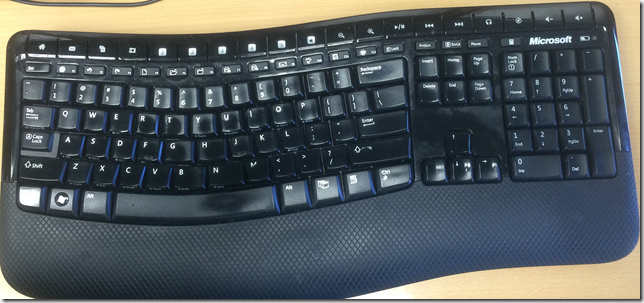

This is my older, wired USB keyboard, the Microsoft Ergonomic Keyboard 4000 v1.0. I switched back to it, but I find that the keys take too much pressure to press, and my hands get tired. I couldn’t keep it up. The keyboard is also larger, which means I need to stretch my fingers further to reach the keys. However, ulnar deviation is a bit better.

Just for fun, I tried using both at the same time:

This worked surprisingly well. It was fluid, except when I needed to switch to the mouse. I’m used to my keyboard being a single keyboard, and something about having two keyboards confused me; I didn’t know which hand to move. I guess I know my “home” position based on the distance between my hands, and if I move one hand, I cannot find home easily without looking.

I did some research and decided to order this keyboard; it should arrive sometime next week:

In the meantime, some of the things I can do are:

- type at a deliberate rate. (not look at keyboard)

- type with one hand only. (requires looking at keyboard)

- I can also just use one finger from each hand. By doing this the entire hand moves, and ulnar deviation is removed. It is also quite fast. (requires looking at keyboard)

[youtube=http://www.youtube.com/watch?v=xLR0Nwh1l6o&w=448&h=252&hd=1]

What did I type?

This is me typing with one hand.

In light of Hanselman’s new app, which allows dictation from an iPhone, I wonder if there are any programmer-specific dictation tools? I have looked at vimspeak; however, that mostly addresses the control aspects of using vi. Think of it:

public int Add(int a, int b) { return a+b; }

“public int shift A d d paren open int a comma int b paren close curly open return a plus b semi close curly” ?
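To make the literal approach concrete, here is a minimal sketch of what such a word-for-word transcriber might look like. The vocabulary (`TWO_WORD`, `ONE_WORD`) and the `transcribe` function are my own hypothetical names, not part of any existing dictation tool, and the rule that consecutive single letters spell one identifier is an assumption.

```python
# Hypothetical spoken vocabulary: phrase -> literal symbol.
TWO_WORD = {
    ("paren", "open"): "(",
    ("paren", "close"): ")",
    ("curly", "open"): "{",
    ("close", "curly"): "}",
}
ONE_WORD = {"comma": ",", "semi": ";", "plus": "+"}


def transcribe(utterance: str) -> str:
    """Turn a literal, word-for-word dictation into code tokens."""
    words = utterance.split()
    out = []           # emitted code tokens
    spelling = False   # True while consecutive single letters extend one name
    shift = False      # True when the next letter should be upper-cased
    i = 0
    while i < len(words):
        # Try two-word symbols first, scanning left to right.
        pair = tuple(words[i:i + 2])
        if pair in TWO_WORD:
            out.append(TWO_WORD[pair])
            spelling = False
            i += 2
            continue
        word = words[i]
        i += 1
        if word == "shift":
            shift = True
            continue
        if word in ONE_WORD:
            out.append(ONE_WORD[word])
            spelling = False
        elif len(word) == 1:
            # Assumption: a run of single letters spells one identifier.
            letter = word.upper() if shift else word.lower()
            if spelling:
                out[-1] += letter
            else:
                out.append(letter)
            spelling = True
        else:
            out.append(word)
            spelling = False
        shift = False
    return " ".join(out)


spoken = ("public int shift A d d paren open int a comma int b "
          "paren close curly open return a plus b semi close curly")
print(transcribe(spoken))
```

Even in this tiny example the speaker has to say every bracket out loud, and the transcriber has no idea that “int a” is a parameter.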

Other folks have talked about it over here: http://productivity.stackexchange.com/questions/3605/how-can-we-use-dragon-naturallyspeaking-to-code-more-efficiently

The problem is one of lost context. In programming we are trying to be specific about contexts, usually using parens and curlies as visual cues: for example, that “int a” and “int b” are parameters of Add. The solution seems like it would be codifying contexts into spoken word, by adding extra keywords to tell the parser what we are trying to do. For example:

“function <name>” => starts adding function, puts you in function mode; creates defaults; additional things make sense in this mode:

- “parameter x”

- “returns [type]”

- [visibility] “public” | “private” | “internal” // keywords can infer that we meant visibility

- “body” // go to editing body mode

So theoretically, “function Shift a d d visibility public returns int parameter a of type int parameter b of type int body return a plus b”, or more specifically:

| What is said | What the code looks like (using underscore to denote cursor) |
| --- | --- |
| function | object a01() { } |
| Shift A | object A() { } |
| d d | object Add() { } |
| visibility | object Add() { } |
| public | public object Add() { } |
| returns | public object Add() { } |
| int | public int Add() { } |
| parameter | public int Add() { } |
| a | public int Add(object a) { } |
| of type | public int Add(object a) { } |
| int | public int Add(int a) { } |

And so on. That is, you map the spoken words into meanings that then get applied to the editor.
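The walkthrough above can be sketched as a small state machine. This is only an illustration under my own assumptions: the class name `FunctionDictation`, the `hear` and `dictate` helpers, the mode names, and the choice to end the body with a semicolon are all hypothetical. The `object` and `a01` defaults come from the table.

```python
from dataclasses import dataclass, field

VISIBILITIES = {"public", "private", "internal"}
OPERATORS = {"plus": "+"}  # hypothetical; a real tool would need many more


@dataclass
class FunctionDictation:
    visibility: str = ""
    return_type: str = "object"  # default until "returns <type>" is heard
    name: str = "a01"            # placeholder name, as in the table
    params: list = field(default_factory=list)  # [type, name] pairs
    body: list = field(default_factory=list)
    mode: str = "name"
    shift: bool = False
    fresh_name: bool = True      # placeholder name not yet overwritten
    new_param: bool = False      # "parameter" heard, name not yet given

    def hear(self, word: str) -> str:
        """Apply one spoken word, then return the code as it now looks."""
        if self.mode == "body":
            self.body.append(OPERATORS.get(word, word))
        elif word == "shift":
            self.shift = True
        elif word == "function":
            self.mode = "name"
        elif word in ("visibility", "returns", "body"):
            self.mode = word
        elif word == "parameter":
            self.mode, self.new_param = "param_name", True
        elif word == "of_type":
            self.mode = "param_type"
        elif word in VISIBILITIES:
            # The keyword alone is enough to infer that visibility was meant.
            self.visibility = word
        else:
            text = word.capitalize() if self.shift else word
            self.shift = False
            if self.mode == "name":
                self.name = text if self.fresh_name else self.name + text
                self.fresh_name = False
            elif self.mode == "returns":
                self.return_type = word
            elif self.mode == "param_name":
                if self.new_param:
                    self.params.append(["object", text])
                    self.new_param = False
                else:
                    self.params[-1][1] += text
            elif self.mode == "param_type" and self.params:
                self.params[-1][0] = word
        return self.render()

    def render(self) -> str:
        head = " ".join(p for p in (self.visibility, self.return_type) if p)
        params = ", ".join(f"{t} {n}" for t, n in self.params)
        # Assumption: the dictated body is one statement ending in ";".
        inner = f" {' '.join(self.body)}; " if self.body else " "
        return f"{head} {self.name}({params}) {{{inner}}}"


def dictate(utterance: str):
    """Return (word, code-so-far) snapshots for a whole utterance."""
    words = utterance.lower().replace("of type", "of_type").split()
    state = FunctionDictation()
    return [(w, state.hear(w)) for w in words]


steps = dictate("function Shift a d d visibility public returns int "
                "parameter a of type int parameter b of type int "
                "body return a plus b")
for word, code in steps:
    print(f"{word:10} {code}")
```

Each printed line pairs a spoken word with the code after hearing it, which mirrors the rows of the table. Once in body mode, this sketch treats every word as body text, so it has no way back out to the function level.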

You could even navigate “into” and “out of” contexts by using clicks and clucks, sounds that are easy to make yet are definitely not letters. “Down” and “up” would apply at the context level, i.e., statements or functions.

It is an interesting problem. It could be a fun thing to solve. Any takers?

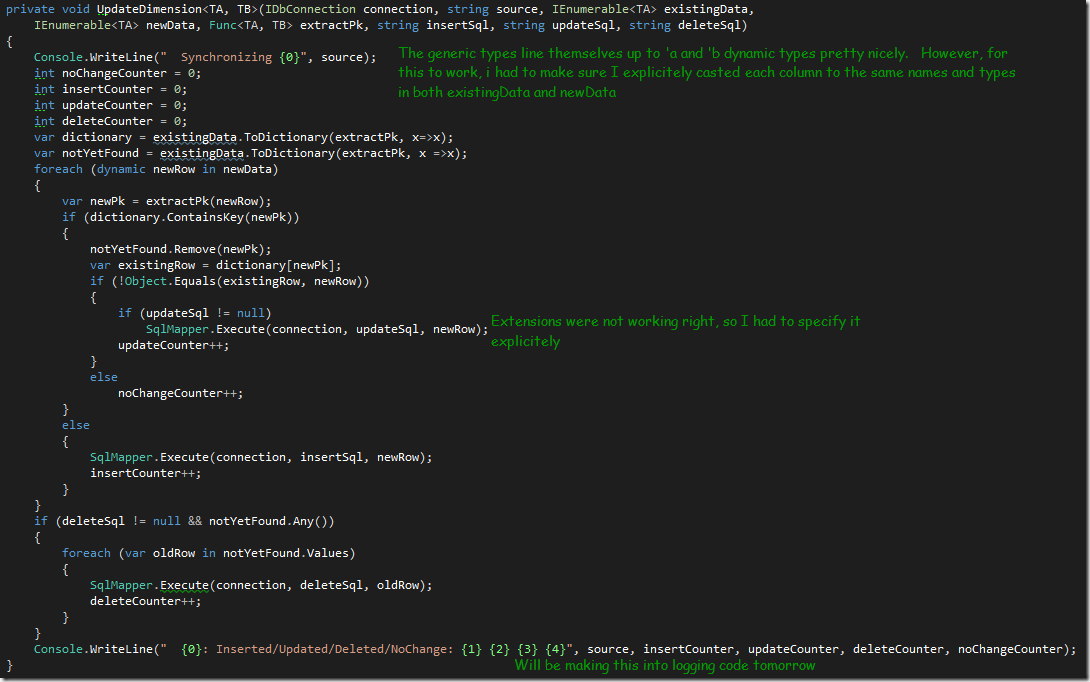

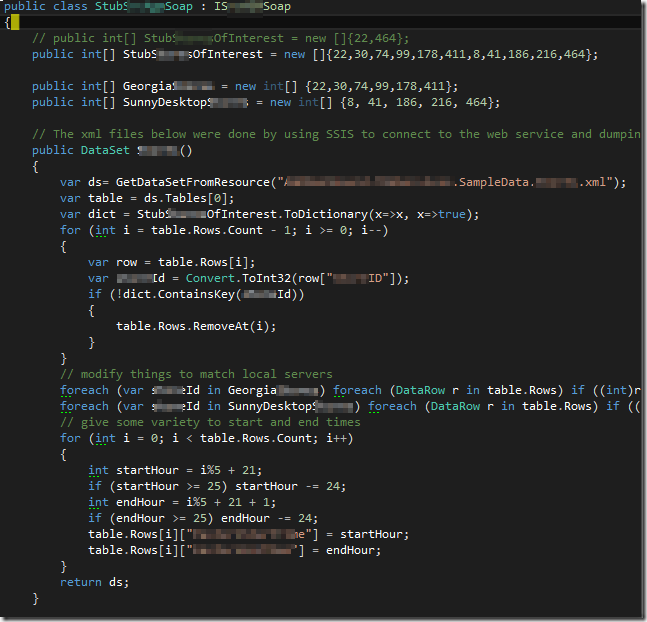

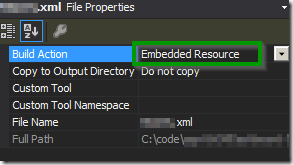

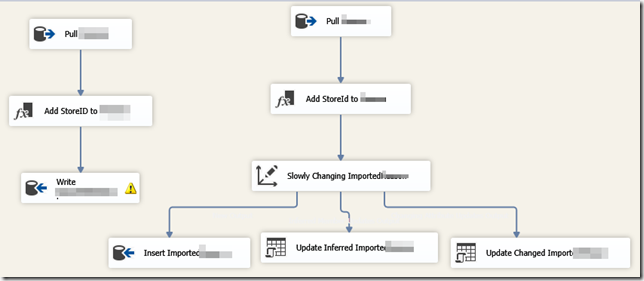

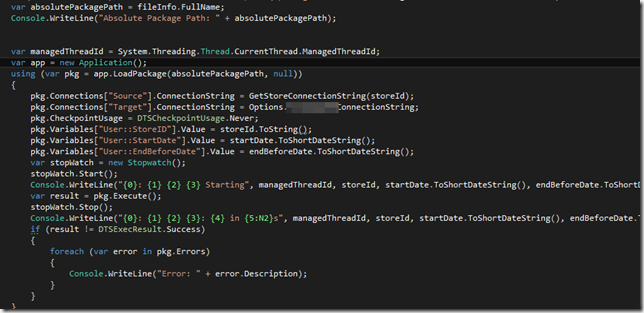

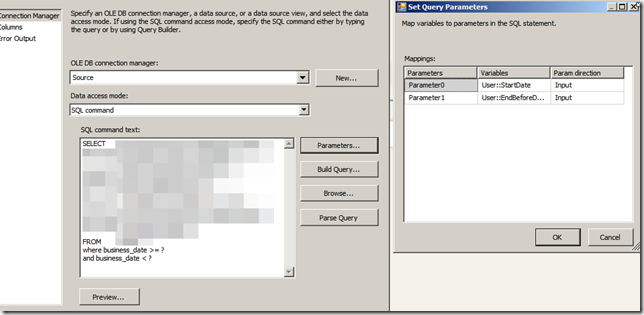

My first thought was to use SSIS to extract the data from the web service, and then do a dimension load into my local tables. But I hit a problem: When the SSIS control task saved the DataSet, it saved it as a diffgram:
