One of those sanity-check moments where I look at what I’m doing and ask whether my priorities are in line. (TL;DR: they are not.)

My wife and kid went out of town for a week, so I had the opportunity to schedule my time any way I chose. Here’s a comparison of “with wife and kid” versus “bacheloring it”:

Wife And Kid (Monday-Sunday)

Bacheloring (Tuesday-Saturday)

Observationus

(observation + obvious; the letters of the heading were color-coded: black = both words, purple = word 1, blue = word 2)

- My work hours were more scattered when the wife and kid were away

- Work spills over to the weekend if I can’t get all my hours in during the week. That happens when sleep intrudes into work, which in turn happens when entertainment intrudes into sleep.

- I had more “white space” – time that I’m not doing anything in particular, just being – when the family was away.

- I spend a significant amount of time in pink (hanging with wife) – I like this.

- I spend a significant amount of time in green (entertainment, hobbies) – I like this.

- I do stay up too late doing hobby stuff and watching Netflix (entertainment), at the expense of sleep.

- I spent more time eating when the family was away, mostly cooking up (measuring out) batches of Soylent and grilling.

Not So Obvious

The timeline is not zoomed in enough to see some of the small stuff:

- I walked the dogs every day while the family was gone. But it only took 15 minutes.

- I didn’t get to the gym while they were away, because my gym time conflicted with the dogs’ feeding and potty time.

- I spent a lot more time doing errands – cleaning stuff, fixing stuff – while the family was gone.

- I did not nap at work while the family was gone.

Soylent: Not So Good News

I bought a week’s supply of PeopleChow 3.01 from Doug, with the intent of living on it while the family was away. I did two trials, with my glucometer:

Trial #1: 1/3 of the batch (100 g carbs):

- before: 89 mg/dL

- 30 minutes after: 138

- 60 minutes after: 168

- 120 minutes after: 158

Trial #2: 1/6 of the batch (50 g carbs):

- before: 93

- 30 minutes after: 158

The goal (well, my goal) is to be under 120 mg/dL two hours after a meal. I couldn’t do it: too many carbs.
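To make the two-hour target concrete, here is a minimal Python sketch that checks the trial readings above against it. The names (`summarize`, `GOAL_MG_DL`) are hypothetical, and it assumes readings are in mg/dL, keyed by minutes after eating, with 0 meaning the pre-meal reading.

```python
# Readings in mg/dL, keyed by minutes after eating (0 = just before the meal).
TRIAL_1 = {0: 89, 30: 138, 60: 168, 120: 158}  # 1/3 batch, 100 g carbs
TRIAL_2 = {0: 93, 30: 158}                     # 1/6 batch, 50 g carbs
GOAL_MG_DL = 120  # personal target at the two-hour mark (hypothetical name)


def summarize(readings: dict, goal: int = GOAL_MG_DL) -> dict:
    """Peak, rise over baseline, and whether the 2-hour reading is under goal."""
    baseline = readings[0]
    peak = max(readings.values())
    two_hour = readings.get(120)  # None if no 2-hour reading was taken
    return {
        "peak": peak,
        "rise": peak - baseline,
        # None means "can't tell": the 2-hour reading is missing.
        "meets_goal": None if two_hour is None else two_hour < goal,
    }


for name, trial in (("Trial #1", TRIAL_1), ("Trial #2", TRIAL_2)):
    print(name, summarize(trial))
```

Trial #2 only has a 30-minute reading, so the sketch reports "can't tell" for it rather than guessing.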

I tried altering the formula to use less corn flour; the result was unpalatable (puke-worthy). I gave up on it.

But the upshot was that I started measuring my blood sugar again.

Me: Not So Good News

I’m a lot less able to handle a carb load than I used to be. Or so it seems. Today I had 40 g of carbs in some Indian lentils:

- Before: 110

- 2 hours after, even with a walk: 158

And, I can feel it. I feel puffy, flabby, out of energy, tired.

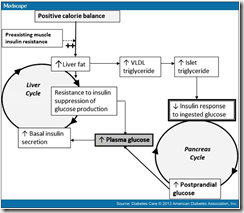

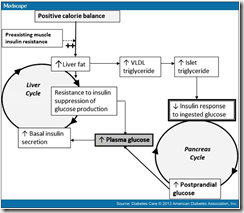

The Twin Cycle Hypothesis of Etiology of Type 2 Diabetes

I did some reading to see what’s new these days in diabetes stuff. I came across this article, with this pretty cool picture (I could not find a public link, so this is a screenshot):

In a nutshell, it gives me an answer to “what the heck is going on”, along with a glimmer of hope: lose enough weight, and the cycle gets less bad. It focuses on some type 2 diabetics who had gastric bypass surgery, radically reduced their body fat, and wham! some of the diabetic cycle vanished.

In the past, I had gotten down to 165 lbs, which for me is a BMI of about 22. I felt a lot better then: I was running, working out, having a blast. But I did not stop being diabetic.

Then I saw this line here:

For me a BMI of 19 would be 130 lbs. That’s 50 lbs less than I am right now. 30 lbs less than what I had aspired to get down to at my best. I have to get down there, AND STAY DOWN THERE, because I’m pretty sure that the last bits of fat to get used up are going to be the ones which are the most troublesome. Or, I could continue to be reasonably happy yet declining.
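BMI is weight divided by height squared, so at a fixed height each BMI target maps to one weight. A minimal Python sketch of that conversion, assuming the standard metric definition (kg/m²) with exact unit factors; the function names are hypothetical, and since the post doesn’t state a height, any height you plug in is your own assumption.

```python
# BMI = weight (kg) / height (m)^2, converted from US units with exact
# factors: 1 lb = 0.45359237 kg, 1 in = 0.0254 m.
KG_PER_LB = 0.45359237
M_PER_IN = 0.0254


def bmi(weight_lb: float, height_in: float) -> float:
    """Body mass index for a weight in pounds and a height in inches."""
    height_m = height_in * M_PER_IN
    return weight_lb * KG_PER_LB / height_m ** 2


def weight_for_bmi(target_bmi: float, height_in: float) -> float:
    """Weight in pounds that yields target_bmi at the given height."""
    height_m = height_in * M_PER_IN
    return target_bmi * height_m ** 2 / KG_PER_LB
```

For example, `weight_for_bmi(19, 70)` gives the BMI-19 weight for a hypothetical 70-inch person. The US shortcut `703 * lb / in**2` agrees with `bmi` to within a fraction of a point.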

Maybe I didn’t drop enough weight that time?

How hard should I strive? After all, I could say I’m an old man. I’m past my prime. I’m beyond the life expectancy that humans had in the Middle Ages…

Bottom Line: I Have a Choice to Make

My former sponsor’s favorite word – Choices. Ah, the bliss of not knowing you have a choice.

I could make a choice to get healthy again.

Which would mean putting exercise back in my schedule. And logging food.

Which means something has to go.

What Goes?

- It won’t be sleep.

- It won’t be work – at least, not yet. I’m not independently wealthy yet.

- It won’t be [all of] hanging with the wife.

- It won’t be Recovery work.

- It would have to be entertainment and hobby. There isn’t anything else to let go of.

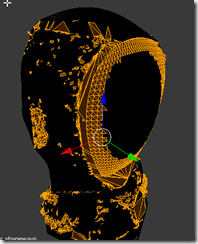

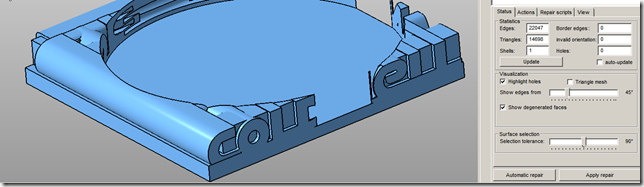

I’ve been overdoing it. Either my brain gets so tired that I just want to numb out with mindless TV, or it gets so obsessed that I have to solve this problem now! (3D printing, Blender, and Shapeways: I’m looking at you.)

So, sometime soon, expect all my 3D printing stuff to come to a stop. Or it will be relegated to one experiment per weekend (or some other healthy amount). I checked the posting queue for this blog, and it’s actually empty right now: when I publish this post, there will be no others queued after it.

I guess I’ll write one more post for “Shelving the Hobby”, listing the irons I have in the fire so that I can let them go temporarily. Or not; I can just list them here:

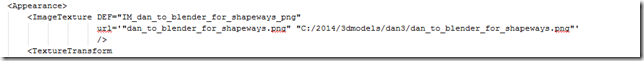

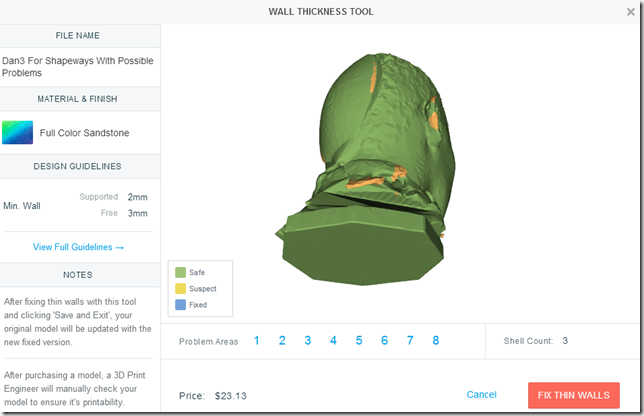

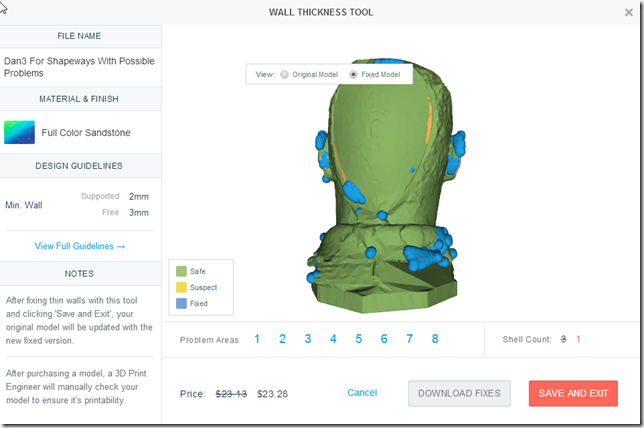

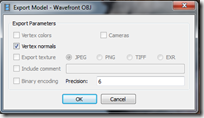

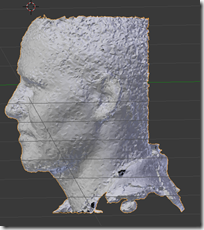

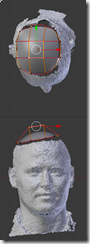

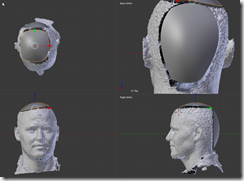

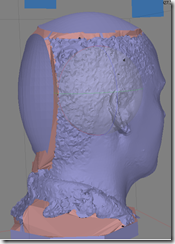

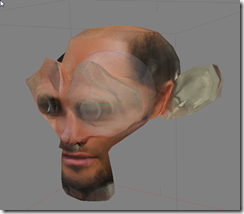

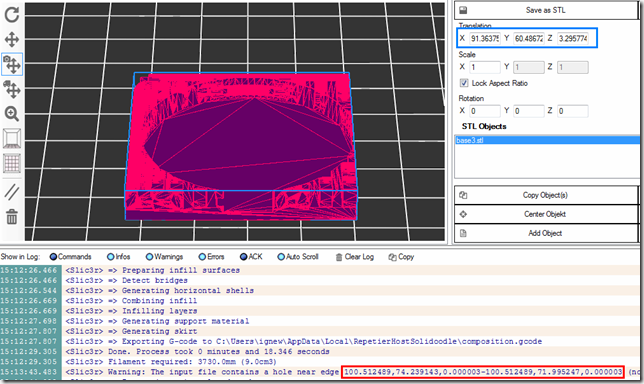

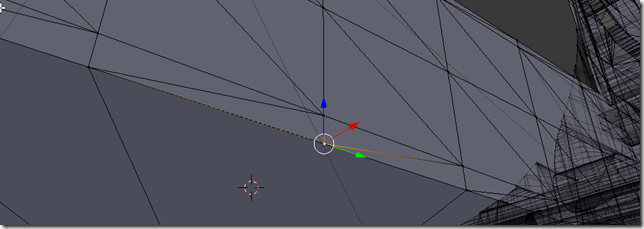

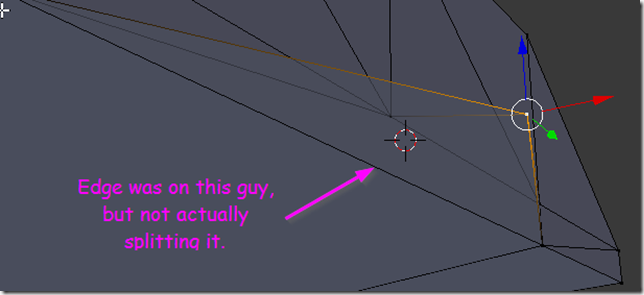

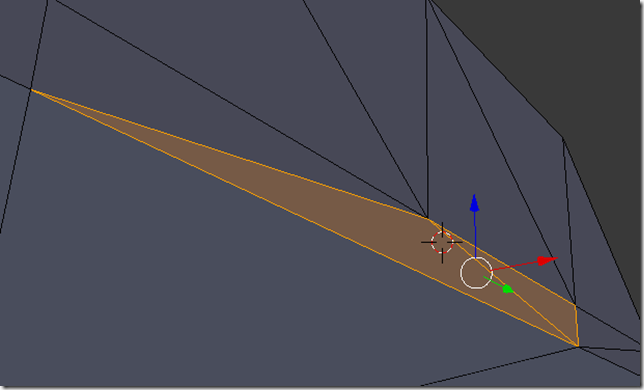

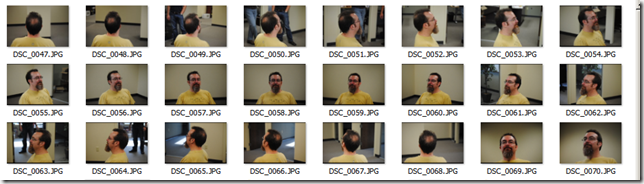

- I have a solution for Agisoft to Blender to Shapeways that involves decimation down to 500, subsurf, and edge creasing. I have ordered a color print of it.

- I have a solution for printing initials cubes without supports. I still have to slice it, but the idea is to print it at a 45-degree angle so that all the letters face “up” (kinda). I have not actually done this yet. I have ordered a small (20cm) cube from Shapeways to see how well their printers do the job.

- I might be doing some silver jewelry via Shapeways involving people’s initials.

Done. Shelved. I’ll post pictures when they arrive.

I think I have a date with a gym tomorrow morning. And if my work hours suffer, well, that’s what Sunday afternoons are for.

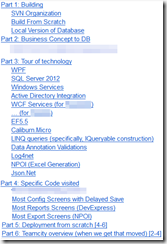

So, here’s my table of contents that I’m filling out (sure to change as it progresses). I’m currently working on “Build From Scratch”, which, to avoid any future awkwardness, means “Create a blank Windows 7 virtual machine, install Visual Studio, and compile.”
