Update: This entry was re-posted, with some updates, on my company blog: http://ignew.com/2013/02/16/developingasateam/

In October, I started on my current project as a solo affair. In November, we grew to two developers, and shortly afterwards to three, and we had to figure out how to work together effectively.

I’ve had some good experiences working as a team in the past, and some bad ones. I was eager to craft a good working experience, and with the help of my colleagues, I think we mostly succeeded. We just gave an internal presentation on the subject at my company, so now it feels right to blog about it.

We started collaborating on Campfire

With one person on a project, well, there’s just me, myself, and I. Not much to do there; I can email the customer questions, and save the replies that contain anything important.

With two people, I can communicate with my teammate over IM (if they’re in), or yell across the hall. Maybe try to maintain a wiki, and forget to do it. Very often, one person takes the primary role in client communication, and the other takes a secondary role.

With three people, things get a bit different. Multi-person IM can be a pain, and one primary with two secondaries means a lot more communication overhead for the primary.

Enter Campfire. http://campfirenow.com/

With Campfire, communication can be asynchronous, as long as everybody agrees to check the Campfire history to see what they missed. We can work on different schedules. (It’s still more fun when we’re on at the same time.)

- Campfire became the place to start asking “I wonder if the customer meant this or that”

- Campfire became the history of the project

- Campfire became the place to state what we were working on, and to trade pieces of work (“while I’m in there, I could take care of xxx”)

- Campfire became the place to let everybody know if one was working from home, or the office, or other

- Campfire became the place to paste code for a quick code review

- Campfire became the place to share cute jokes, and side technical things, as we got to know each other more

Note: There are many alternatives to Campfire; it’s just what we used. In the old days we could have done this with IRC, although it would take some setup to save the history.

We centralized our communication with the client via Basecamp

If we had a question for the client, we would use a Basecamp discussion:

- We would create a discussion in Basecamp, with a subject of “Question: xxxxx” and the question as the body.

- We would “loop the client in” (specifying their email addresses) to the discussion. The client folks would get an email with the discussion thread. We would also add ourselves to the discussion.

- When the client responded, the reply was automatically added to Basecamp, and we got notified by email.

- Once the question was answered, we would rename the subject to “Answered: xxxx”.

This gave us:

- A central place where we could see “what decisions had been made”

- An easy way to figure out “what has not yet been answered”

I would recommend this even for a single person working on a project, because of the second bullet point.
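The subject-line convention is simple enough to sketch. The snippet below is a minimal Python illustration, assuming each discussion can be reduced to its subject line; `Discussion`, `mark_answered`, `open_questions`, and `decisions` are hypothetical names for illustration, not part of Basecamp’s API.

```python
from dataclasses import dataclass

QUESTION = "Question: "
ANSWERED = "Answered: "


@dataclass
class Discussion:
    """Hypothetical stand-in for a Basecamp discussion; only the subject matters here."""
    subject: str


def mark_answered(discussion: Discussion) -> None:
    """Rename "Question: xxxx" to "Answered: xxxx" once the client has replied."""
    if discussion.subject.startswith(QUESTION):
        discussion.subject = ANSWERED + discussion.subject[len(QUESTION):]


def open_questions(discussions: list[Discussion]) -> list[str]:
    """What has not yet been answered: every subject still prefixed with "Question: "."""
    return [d.subject for d in discussions if d.subject.startswith(QUESTION)]


def decisions(discussions: list[Discussion]) -> list[str]:
    """What decisions have been made: every subject renamed to "Answered: "."""
    return [d.subject for d in discussions if d.subject.startswith(ANSWERED)]


# Example threads (made up for illustration).
threads = [
    Discussion("Question: Which browsers must we support?"),
    Discussion("Question: Should exports include archived records?"),
]
mark_answered(threads[0])
print(open_questions(threads))  # ['Question: Should exports include archived records?']
print(decisions(threads))       # ['Answered: Which browsers must we support?']
```

Because the state lives in the subject line itself, anyone scanning the discussion list sees the same split between open questions and decisions without any extra tooling.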

Once again, I’m sure there are alternatives, although I’m not sure which ones would log customer responses this easily while presenting the questions in a format the customer already understands: email.

We collaborated on Google Documents for Digesting Requirements

When we received the equivalent of 15-20 pages of requirements to digest and estimate, with links to external resources (mockups) and other “stuff”, we floundered for about 2 hours. This was our (eventual) solution:

- One of us pulled ALL the information into a SINGLE document, in Google Docs.

- We individually went through the document, adding comments at places that we had questions, highlighting things that we found interesting, etc.

- In reality, we did this together in almost real time. Maybe not on the same page, but I could see my teammates adding comments, and I could even respond to them as we all looked at the document together.

- We then sat down face to face (although we could have done it over a phone call), and walked the document from top to bottom – everybody talking about the stuff they found interesting, how they thought it would affect the architecture, etc.

- We then created a spreadsheet, and started filling out the “details” of the work. We all collaborated on it as we were typing it up:

- By the time we left that document, I *knew* that we all agreed on the same way to do the work, and the architecture.

- The breakdown was more rigorous than I could have done myself (everybody’s rigor got UNION’ed together)

- During the collaboration process, we also figured out how to divvy up the work. In this case, we decided to have one person spearhead the UI work, and another come up behind, connecting the UI to the underlying layers.

Google Docs is just what we had available at my company; one could also edit Office documents in SkyDrive in a similar way. As long as you can see what everybody is doing in real time, this works.

We Used Planning Poker and Google Spreadsheets To Estimate

Once we had a work breakdown, we would use the planning poker process to figure out Low-end and High-end estimates on the individual items.

- We did this face to face with a real planning poker deck, once. (Thank you, Microsoft!)

- We did this online, in a Google spreadsheet, once.

- We hid the numbers with white-on-white text, worked through the spreadsheet, and called out “done” when we were ready.

- We revealed all the numbers

- Then we went line by line to discuss any differences.

- Sadly, our estimates were way off – high – but I don’t think it was because of the process.

Beware: with multiple people, you will get a higher number. This is because those who are more sure of themselves and bid lower give in to the folks who bid higher.
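The hide, reveal, and compare steps can be sketched in a few lines. This is a minimal Python illustration under stated assumptions: the item names, estimator names, and hour values are made up, and each person gives a (low, high) pair per item, mirroring the Low-end and High-end columns described above.

```python
# Hypothetical data: each person's (low, high) estimate in hours for each work item.
# In the spreadsheet these were hidden with white-on-white text until everyone was done.
estimates = {
    "Login page": {"Ann": (4, 8), "Bob": (4, 8), "Cy": (4, 8)},
    "Report export": {"Ann": (8, 16), "Bob": (16, 24), "Cy": (8, 16)},
}


def everyone_done(estimates, done):
    """Reveal only once every estimator has called out "done"."""
    people = {person for by_person in estimates.values() for person in by_person}
    return people <= set(done)


def items_to_discuss(estimates):
    """After the reveal, list the items where anyone's (low, high) estimate differs."""
    return [
        item
        for item, by_person in estimates.items()
        if len(set(by_person.values())) > 1
    ]


if everyone_done(estimates, done=["Ann", "Bob", "Cy"]):
    for item in items_to_discuss(estimates):
        print(item, estimates[item])
# prints: Report export {'Ann': (8, 16), 'Bob': (16, 24), 'Cy': (8, 16)}
```

Items everyone agrees on drop out, so the line-by-line discussion only covers the items where people saw the work differently.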

We started collaborating on Google Documents for Demo Notes

Our process involves a weekly demo to show the client where we are. These demos were on Fridays at 11am. So on Thursday night (depending on who got to a stopping point first) or Friday morning, we would create a “Demo Notes” document:

- We had a “team level” update which was usually put together by the “most chatty” of us. (me)

- Each of us had a section to fill out with the things we had gotten done and wanted to show the customer.

- We each wrote with a different level of detail. That’s fine, and in time, it came to be something we celebrated.

- In each section, we set aside questions for the customer, formatted like the one below. This made it clear when an answer was missing, and gave us a spot to type it in:

- Question: Why did the chicken cross the road?

- Answer:

- We could have added a section on “pending questions”. Our client was excellent in responding, though, so we didn’t need to.

- At the end of the document, we added a “Decisions” section.

During the demo:

- We would openly share the demo notes document, using it as an agenda. Whoever’s screen was being shared, the client would see the demo notes somewhere on it.

- As one person presented, if the client had feedback, or answers, another of us would take notes on their behalf.

- It gave all of us a job. With three of us on the call, we had two people listening in and ensuring that anything that was important got captured. We helped each other. We came to trust each other in a very “you’ve got my back” kind of way.

- When the client stated something that was even a bit complex, we would type it into the demo notes, highlight it, and ask: “Did I capture this correctly?”

- If decisions were made, we added them into the Decisions section.

Soon after the demo:

- We would scrub the document (a little bit), to clean up the mess that sometimes happens during the demo.

- We would export it as a PDF and email it to the client.

After the demo:

- If something came up during the demo that we needed to take care of, we would add it as a “TODO: xxx” in the demo notes.

- When it was done, one of us could go back to those demo notes and change it to “DONE: xxx”. (We didn’t all do this; maybe it was just me. But that’s the beauty of a live document: it can represent “now” rather than “at that time”.)

We started sharing administrative tasks

In a past team environment, I made the mistake of “volunteering” to be the only person who did administrative tasks, like merges, status updates, etc. I was “team lead”, after all, wasn’t I supposed to do this? In doing so, under the guise of “protecting” my teammates, I signed up for all kinds of pain.

In this incarnation, we’re going with the philosophy “Everybody is capable of, and willing to do, everything”.

The first part, “capable”, meaning:

- If there’s something that one of us doesn’t know how to do, we’re willing to learn

- We don’t have to be awesome at it, as long as we can get it done well enough to move the project forward.

The second part, “willing”, meaning:

- Nobody has to be saddled with exclusive pain.

- I know my teammates have my back. They are capable and willing to take on my pain.

So, we’ve ended up at this:

- We have a round robin order, for the weekly administrative tasks:

- Preparing and sending the Weekly Status Report

- Sending the Demo notes

- Updating the Project Burndown

Additionally, we started doing automatic merges from the parent branch into our branch. At first, we tried a “weekly” approach, but that ended up being WAY too much pain in a week. So, if we hit that again, we might try an “it’s your turn today” round-robin approach instead.
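A round-robin order is just a cycle over the team. Here is a minimal Python sketch, assuming one person owns all of the weekly admin tasks in a given week; the team names are hypothetical. The same `rotation` helper would work for a daily “it’s your turn today” merge rotation by counting days instead of weeks.

```python
from itertools import cycle, islice

# Hypothetical team members, listed in the agreed round-robin order.
TEAM = ["Ann", "Bob", "Cy"]

# The weekly administrative tasks listed above, all handled by that week's owner.
WEEKLY_TASKS = [
    "Preparing and sending the Weekly Status Report",
    "Sending the Demo notes",
    "Updating the Project Burndown",
]


def rotation(team, periods):
    """Who is on duty for each of the next `periods` weeks (or days), wrapping around."""
    return list(islice(cycle(team), periods))


for week, owner in enumerate(rotation(TEAM, 4), start=1):
    print(f"Week {week}: {owner} handles {len(WEEKLY_TASKS)} admin tasks")
```

Keeping the order fixed means everybody knows whose turn is next, so nobody ends up saddled with the pain by default.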

We started using Google Docs for Status Report Documents

The person saddled with the status report would create the document, look through our time tracking system and the commit log to see what people did, and take a first stab at the contents of the report.

Then, they would invite the rest of us into the report. We would update our individual sections, and add a “sign off” at the bottom of the document.

Once the document had everybody’s sign off, it got sent.

There’s More To Learn

Even with all of the above awesomeness, we have room to grow. My teammates will probably either groan or cackle with glee when they read this, but here’s what I’m thinking:

- There are other admin tasks we were lax on. These could be added in to the admin-task-monkey’s list of stuff to handle.

- We might start writing test cases together.

- We might start running each other’s test cases [more often]

- We might use a better way of breaking down the available work – in such a way that more than one person can get their feet wet in a feature.

- We’ve now invited the client into some of our collaboration – might learn some things there.

The underlying thing is that we were willing to do what was necessary to have a good team working environment, and we did it. And for that, I am grateful.

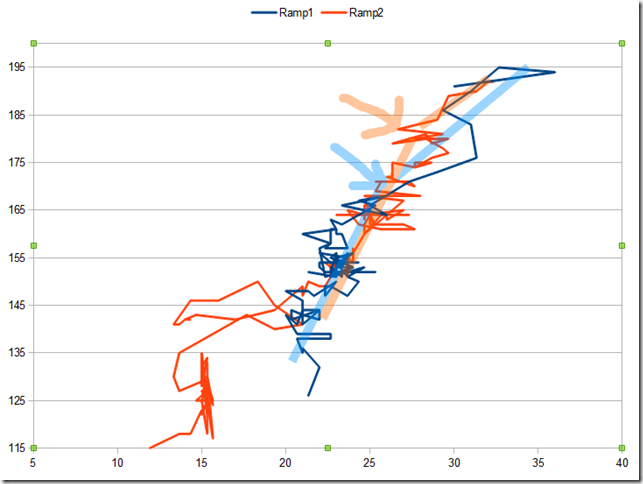
