I have had a project in my brain for a long time; I think I first mentioned it in 2013.*

The problem is, it wouldn’t go away. It started to .. stink: stale, stinking, and stuck in my mind. It had to be done.

The gist of the project is:

- Take several ways of driving to work

- Plot them out in 3D space with Time on the Z-Axis, in a virtual race as it were

- Should be able to see things like stoplights show up as vertical bars
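To make the "Time on the Z-Axis" idea concrete, here is a minimal sketch. It is not the code from the repo: the `{ x, y, t }` point shape, the `toRacePoints` name and the `zScale` parameter are all made up for illustration, and it assumes the GPS positions have already been projected to flat x/y coordinates.

```javascript
// Hypothetical input: each point is { x, y, t }, with x and y already
// projected to flat coordinates and t in seconds.
function toRacePoints(track, zScale) {
  if (track.length === 0) return [];
  const t0 = track[0].t; // every track starts the "race" at z = 0
  return track.map(p => [p.x, p.y, (p.t - t0) * zScale]);
}

const commute = [
  { x: 0, y: 0, t: 1000 },
  { x: 50, y: 0, t: 1010 },
  { x: 50, y: 0, t: 1040 }, // sitting at a light: x and y do not move
  { x: 90, y: 0, t: 1050 }
];

const racePoints = toRacePoints(commute, 0.5);
console.log(racePoints);
```

The second and third points share the same x and y but differ in z (5 versus 20), which is exactly the vertical bar a stoplight should produce.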

Well, I got tired of thinking about it and decided to do it. I was spurred on by finding out that “jscad”, which I had known as openjscad.org, was now available as a node module: https://www.npmjs.com/package/jscad. Okay, let’s do this!

* Here’s a first reference: Car Stats: Boogers! Foiled! 1/11/2013 … There are a few other posts just prior where I had been plotting interesting things from car track data, using PowerShell.

The implementation details

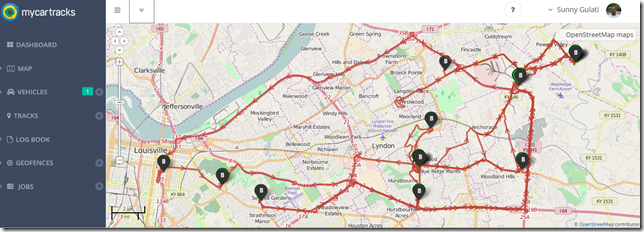

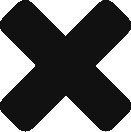

The above screenshot shows a sample output with some initial tracks, but .. they are the wrong tracks. I’ll be recording the correct tracks later.

- I created a “Base” for the sculpture by also drawing all the tracks along Z=0, but a little bit wider.

- I made it a bit more stable by putting a “Pillar” up to the other end of the track, so it’s supported.
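As a rough sketch of the base and pillar idea (not the repo's actual code), the function below turns one track into a list of beam descriptions. The `sculptureSegments` name, the `kind` labels and the 1.5x widening factor are my own assumptions for illustration; points are `[x, y, z]` arrays.

```javascript
// Hypothetical helper: describe the beams needed for one track.
function sculptureSegments(points, radius) {
  const segments = [];
  for (let i = 1; i < points.length; i++) {
    const a = points[i - 1];
    const b = points[i];
    // The track itself, at its real height (time).
    segments.push({ kind: 'track', start: a, end: b, radius: radius });
    // The same segment flattened to Z=0 and a bit wider, forming the base.
    segments.push({
      kind: 'base',
      start: [a[0], a[1], 0],
      end: [b[0], b[1], 0],
      radius: radius * 1.5 // assumed widening factor
    });
  }
  if (points.length > 0) {
    // A pillar from the base straight up to the far end of the track.
    const last = points[points.length - 1];
    segments.push({ kind: 'pillar', start: [last[0], last[1], 0], end: last, radius: radius });
  }
  return segments;
}
```

Each description could then be handed to whatever draws a beam between two points.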

Code at: https://github.com/sunnywiz/commute3d .. the version as of 4/29/2017 11:57pm should be runnable to give the above output.

It took me probably 12 hours spread over 3 days … here are some of the things I learned along the way:

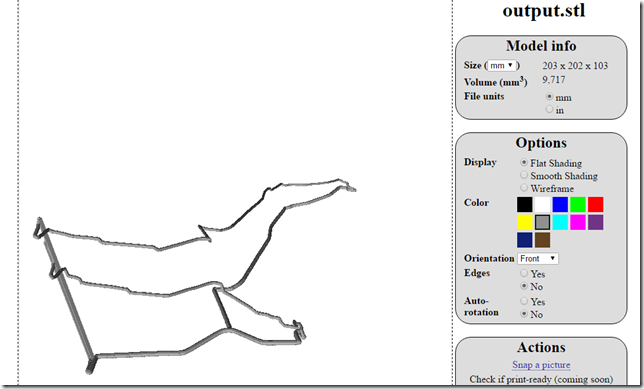

chocolatey install nodejs

I’ve taken to using chocolatey to install Windows packages so I don’t have to go hunt things down on the internet. Most of my machines have it; it’s part of how I set up a machine.

I could totally install nodejs via chocolatey, and once installed, that gave me npm.

The command line, btw, would be: cinst -y nodejs

npm init

This bit will seem silly to people who’ve been using it for a while, but these were the walls I had to hit.

I couldn’t npm install a package (everybody says “run this command”, and it doesn’t work) until I had first started a project with “npm init”. I was thinking it was like CPAN, where you added a package to a central repo and it was available, but nope: it’s a local dependency per project, more like nuget. Makes sense; my CPAN (Perl) experience was from 1998 and the world has changed since then.

node is awesome

It feels like the days of Perl way back. Need something? There’s a library. Install it, use it, move on, solve the problem.

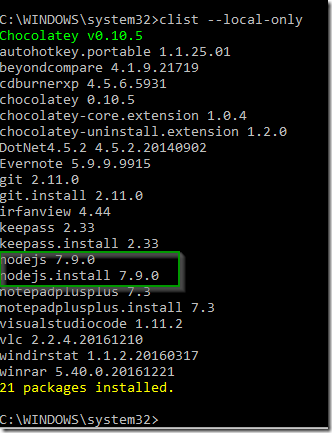

VSCode is awesome

Editing and debugging is a LOT easier if you use an appropriate developer environment. I tried vscode, and much to my surprise, I found it could:

- Edit with intellisense (I knew this, but didn’t realize how fast it was)

- Debug! Watch! Inspect! OMG! Very similar to Chrome Dev Tools.

- Integrated terminal

- Integrated git awareness and commits and pushes

- Split screen yadda yadda

- Very Nice.

nodejs asynchronous can be a learning curve

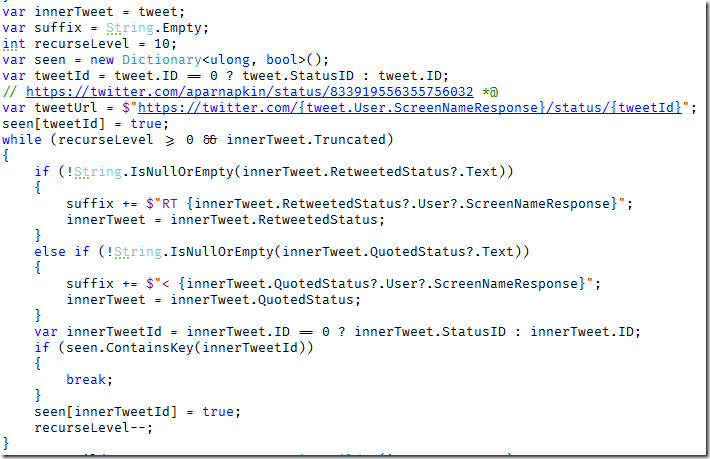

Take these two bits of code:

The first problem I had was that it would go to read all the tracks in … asynchronously. I didn’t have a way to wait till all the tracks were read before it continued on to doing something with the tracks.

That led me to some confusing code using “Promises.map”. Then I found out that most folks do const Promises = require('bluebird')! There’s a library that makes things easier.

So, if you’re not aware, and explaining to my future self:

- Call readtracks .. it’s an async call

- It returns a promise.

- the implementation of readtracks eventually either calls resolve or reject (in my case, resolve).

- At that point, the promise’s “then” clause gets run.

- the glob … function (er, files) bit is the “normal” nodejs callback strategy, one level below promises .. it doesn’t rely on promises, it’s given a callback directly.

- the bluebird.map() basically does a foreach() on files: for each one, it runs the filename through a function, takes the result of that function, and sticks it in an array. It does this in a promise…

- … which, when it’s done with all the things, then calls resolve

- the text fileName => readTrack(fileName) is the same as function(fileName) { return readTrack(fileName) }.

- when you have promises, there’s a way of writing them out using “async” and “await”. I didn’t try that.

- the .then(resolve) is the same as .then(function( x ) { resolve(x); } )
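Since the original snippets did not survive, here is a minimal sketch of the pattern the list above describes. It is a stand-in, not the repo's code: to stay runnable with nothing installed it uses Node's built-in `fs.readdir` in place of glob and `Promise.all` in place of bluebird's map, and the `readTrack` body (split a text file into lines) is invented.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical per-file reader: resolves with the contents of one track file.
function readTrack(fileName) {
  return new Promise((resolve, reject) => {
    // fs.readFile uses the plain Node callback style: (err, data).
    fs.readFile(fileName, 'utf8', (err, text) => {
      if (err) return reject(err);
      resolve({ fileName: fileName, lines: text.split('\n').filter(Boolean) });
    });
  });
}

// Resolves with an array of tracks once every file in `dir` has been read.
function readTracks(dir) {
  return new Promise((resolve, reject) => {
    // fs.readdir stands in for glob: both hand their results to a callback.
    fs.readdir(dir, (err, names) => {
      if (err) return reject(err);
      const files = names.map(name => path.join(dir, name));
      // Promise.all stands in for bluebird's map(): one promise per file,
      // fulfilled with an array of results once all of them are done.
      Promise.all(files.map(fileName => readTrack(fileName)))
        .then(resolve)
        .catch(reject);
    });
  });
}

// Usage (assuming a ./tracks folder exists):
//   readTracks('./tracks').then(tracks => console.log(tracks.length));
// or, inside an async function:
//   const tracks = await readTracks('./tracks');
```

Nothing that depends on the tracks runs until the outer promise resolves, which is the "wait till all the tracks were read" behaviour I was missing at first.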

The other beginner mistake that I kept making – I would forget to say “new” when I meant to create a new object.

So I want to join two points in 3d space with a beam

I spent an embarrassingly long time trying to figure out how to take a cube, and scale it and rotate it and transform it so it acts as a beam between (x1,y1,z1) and (x2,y2,z2).

The answer is:

The resolution:4 gives it four edges.
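The snippet itself is missing here, so this is a reconstruction rather than the original: as far as I can tell from the OpenJsCad docs, `CSG.cylinder` accepts `start`, `end`, `radius` and `resolution` options, which does the whole job in one call. The `beam` wrapper is hypothetical, and `CSG` is passed in as a parameter because how it gets exported depends on the jscad version you have installed.

```javascript
// Hypothetical wrapper: a four-sided "cylinder" between two points is a beam.
// `CSG` is whatever object your jscad / csg.js install exposes.
function beam(CSG, p1, p2, radius) {
  return CSG.cylinder({
    start: p1,       // [x1, y1, z1]
    end: p2,         // [x2, y2, z2]
    radius: radius,
    resolution: 4    // four edges instead of a round cross-section
  });
}
```

No scaling, rotating or transforming of a cube is needed; the start and end points carry all of the orientation.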

I need to view .STL files on my machine

3D Builder is a Windows program that would let me do it, but it hung sometimes.

The easier route was to drag the model over to http://www.viewstl.com/ (which is what I did for the screenshot with this blog post).

Netfabb got Bought Out

Once upon a time, I found some really nice software .. which I thought I liked enough to give them real money. Looking back, I can’t find a record of giving them money… I guess I intended to at one point.

In the time that I haven’t been doing 3D printing, apparently Autodesk bought them out, and.. the new cost is $125 PER MONTH. Uh, no. While researching for this blog post, I did manage to find a binary of the old netfabb Studio Basic, so I snagged that. I saved my emails with the keys that I had used to activate it in the past, and those seemed to work, so Yay!

CSG Unioning of 1000+ things takes a long time

I also discovered console.time() while benching this.

When running the program, I end up with a bunch of CSGs, but they’re all intersecting. I need to merge them together to get a printable thing.

That code, when run for a larger print (100 tracks), took all of 18 hours to do the merging. That was … too much. So, I tried to write a program to make the merging easier.

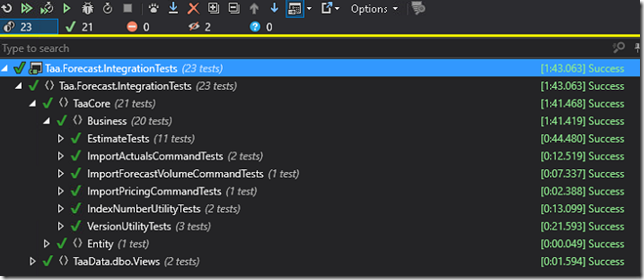

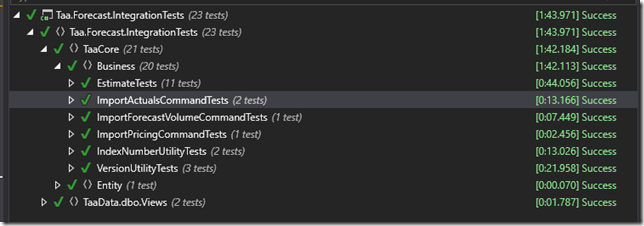

1029 items (spheres, cylinders, boxes) unioned together, NO DEBUGGER, yielding a CSG with 11935 polygons:

- If I union them in a for loop one after the other:

  - 34 minutes!

  - It gets slower over time.

  - there’s probably an O(N^2) that happens under the hood – it has to re-consider the same shape over and over as it tries to add new shapes.

- If I union them using a binary tree tactic:

  - 42 seconds!!!

  - The actual numbers:

- There are probably libraries out there that specialize in unioning arrays of CSGs, rather than two objects at a time. Maybe I’ll upgrade at some point.
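The two tactics can be sketched like this. It is an illustration rather than the repo's code: `FakeSolid` is a made-up stand-in so the sketch runs without jscad, relying only on the `a.union(b)` method that CSG objects also have, and `loopUnion` / `treeUnion` are my names for the two approaches. The `console.time()` calls show how the timing was done, but timings on the fake solids say nothing about real CSG performance.

```javascript
// Made-up stand-in for a CSG solid; real CSG objects also offer a.union(b).
class FakeSolid {
  constructor(parts) { this.parts = parts; }
  union(other) { return new FakeSolid(this.parts.concat(other.parts)); }
}

// For loop: every new shape is unioned into one ever-growing result.
function loopUnion(solids) {
  let result = solids[0];
  for (let i = 1; i < solids.length; i++) {
    result = result.union(solids[i]);
  }
  return result;
}

// Binary tree: union neighbours pairwise, halving the list on each pass,
// so most unions happen between small solids.
function treeUnion(solids) {
  let level = solids.slice();
  while (level.length > 1) {
    const next = [];
    for (let i = 0; i < level.length; i += 2) {
      next.push(i + 1 < level.length ? level[i].union(level[i + 1]) : level[i]);
    }
    level = next;
  }
  return level[0]; // undefined if the input was empty
}

const solids = [];
for (let i = 0; i < 1029; i++) solids.push(new FakeSolid([i]));

console.time('loop union');
const loopResult = loopUnion(solids);
console.timeEnd('loop union');

console.time('tree union');
const treeResult = treeUnion(solids);
console.timeEnd('tree union');
```

With 1029 inputs the tree version needs about 11 passes, and only the last few of them touch large solids.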

Note that this is not absolutely necessary: if I upload the non-unioned version to somebody like sculpteo, they have an “auto-fixer” tool that fixes things pretty quickly. It just .. feels mathematically clean to do it.

I did try to write the above fancyUnion using promises, but .. it didn’t help. Node still only uses one thread; it did not branch out to multithreading.

3 Major sources of Documentation for jscad / openjscad / csg:

- Low level docs for csg.js, possibly outdated, possibly auto-generated and very up to date: http://evanw.github.io/csg.js/docs/

- The Next best one to read is this one: http://joostn.github.io/OpenJsCad/

- And then there’s this one, fairly similar to previous: https://en.wikibooks.org/wiki/OpenJSCAD_User_Guide

- And then there’s opening csg.js as installed by npm directly, and looking at the code – for when the arguments you pass in don’t seem to work.

- Example: sphere. It’s either center:true, or center:[x,y,z], depending.

Conclusion

Code is written!

I’m going to hopefully get some tracks recorded over this week.

I’ll make another post of how the printing process goes with the real sculpture.

