My wrists have been hurting lately, especially the right one. My wife thinks I have carpal tunnel syndrome, and she might be right. I already have an ergonomic keyboard and a trackball, and I can use a mouse in either hand, with either button configuration. However, when working with code, there’s definitely a repetitive “switch hand to arrow keys” and “switch back” motion (at least for me). So, this is my journey to save on keystrokes and wrist movements.

Step 1: Try Not to Use the Mouse

I started by putting the mouse very far away from me. This forced me to try to find keyboard shortcuts for most of the things I was trying to do – especially switching windows. Here are some of the ones I use now; most of these are not the default keyboard combinations, but rather the secondary keyboard combinations, which I was left with after vsvim got installed.

| Shortcut | What it does |
| --- | --- |
| Shift-Alt-L or Ctrl-Alt-L | Solution Explorer |
| F5 | Build + Debug |
| F6 or Ctrl-Shift-B | Build |
| Ctrl-Alt-O | Output Window |
| Ctrl-R Ctrl-R | ReSharper Refactor |
| Alt-~ | Navigate to related symbol |
| Ctrl-K C | Show Pending Changes |
| Ctrl-T, Ctrl-Shift-T | ReSharper Navigate to Class / File |
| Alt-\ | Go to Member |
| Ctrl-Alt-F | Show File Structure |
| Alt-E O L | Turn off auto-collapsed stuff |

I also adopted a layout where I have a bunch of code windows, and all other windows are either shoved over on the right or detached and on another monitor. No more messing with split windows all over the place. When I use a keyboard shortcut, the window becomes visible wherever it is. I don’t hunt around in tabs anymore.

Step 2: VsVim

History

I first learned vi in 1983, on a VT100 terminal emulator connected via a 150 baud modem to the Unix server provided by Iowa State University’s Computer Science department. (I was still in high school, visiting my brother, who was a graduate student at the time.) There was some kind of vi-tutor program that I went through. vi was also much better than edlin and ed, which were my other options at the time.

Anti-Religious-Statement: I used it religiously till 1990, when, while learning LISP, I also learned to love emacs. Yes, I stayed in emacs most of the time, starting shell windows as needed.

I maintained a proficiency in both vi and emacs till 2001, when I got assimilated by .Net and left my Unix roots behind.

And Now

Having had a history with it, I decided to try vsvim and see how quickly things came back to me.

The first thing I noticed is that in every other program I used, whenever I mis typ hhhhcwtyped something, I’d start throwing out gibberish involving hhhjjjjxdw vi movement commands. And pressing ESC a lot. I am still having to train myself to only use vi commands when I see the flashing yellow block cursor that I configured it to show.

I also had to un-bind a few things – for example, vi’s Ctrl-R is a lot less useful to me than ReSharper’s Ctrl-R Refactorhhhhhhhhhhhhhhh I did it again. For vi’s Ctrl-R “redo”, I can just type :redo instead.

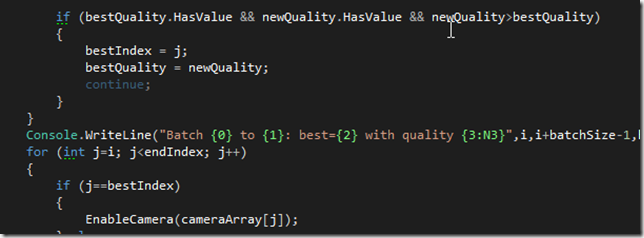

And where am I now? I still need to think about it a bit, but, for example, recently I changed some code from being a static Foo.DoSomething() to an IFoo.DoSomething(), and I had to inject the class in a bunch (10+?) of constructors. The key sequences went something like this. (Steps marked R# are ReSharper commands; the rest are vsvim commands.)

| Keys | What it does |
| --- | --- |
| Alt-\ ctor ENTER | Jump to the constructor in the file (R#) |
| /) ENTER | Search forward for “)” and put the cursor there (/ and ? search across lines; f and F stay on the current line) |
| i, IFoo foo ESC | Insert “, IFoo foo” |
| F, | Go back to the comma |
| v lllllllllll "ay | Enter visual select mode, extend the selection to the right, and yank (copy) it into buffer a; the cursor ends up back at the comma |
| /foo ENTER | Jump forward to foo |
| Alt-ENTER ENTER | Use the R# “insert field for this thingy in the constructor” thingy |
| Ctrl-T yafvm ENTER | Use R# Go to Class, looking for YetAnotherFooViewModel (most of the common things I work with have a very fast acronym. For example “BasePortfolioEditorViewModel” is “bpevm”. I can also use regexp stuff) |
| Alt-\ ctor ENTER | Jump to the constructor (R#) |
| /) ENTER | Go to the closing parenthesis |
| "aP | Paste from buffer a before the cursor |
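
The acronym trick in the Go to Class step can be sketched in a few lines of Python. This is only a rough illustration of the idea (match on the first letter of each capitalized “hump” in a type name), not R#’s actual matching algorithm. The helper names `hump_initials` and `matches_acronym` and the `FooViewModel` entry are made up for the example.

```python
import re


def hump_initials(name):
    """Return the lowercase first letters of each CamelCase hump in a type name."""
    # A hump starts at an uppercase letter: "FooViewModel" -> Foo, View, Model.
    humps = re.findall(r"[A-Z][a-z0-9]*", name)
    return "".join(hump[0].lower() for hump in humps)


def matches_acronym(query, name):
    """True if the query is a prefix of the name's hump initials (case-insensitive)."""
    return hump_initials(name).startswith(query.lower())


# The first two names come from the post; FooViewModel is a hypothetical extra.
types = ["YetAnotherFooViewModel", "BasePortfolioEditorViewModel", "FooViewModel"]

print([t for t in types if matches_acronym("yafvm", t)])  # ['YetAnotherFooViewModel']
print([t for t in types if matches_acronym("bpevm", t)])  # ['BasePortfolioEditorViewModel']
```

A real matcher is presumably more forgiving (partial humps, wildcards, fuzzy ranking); the sketch only shows why five keystrokes are enough to single out a long class name.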

If this sounds like complete gibberish … yes it is. But here’s the thing:

- I am talking aweZUM s3krit c0dez with my computer!

- My fingers are not leaving the home position on the keyboard. Not even for arrow keys.

- By storing snippets of text into paste buffers (a-z, 0-9, etc), I can avoid typing those things again, which is very useful.

- If I plan ahead a bit I can save a lot of keystrokes trying to get somewhere in a file.

- Once I enter insert mode, it’s just like normal – I can still use arrow keys to move around, shift-arrow to select, etc.
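
The paste-buffer idea can be modeled as a small dictionary keyed by buffer name. Below is a minimal Python sketch of what yanking into buffer a and pasting before the cursor does to the text. It is a toy model, not how vsvim works internally: the `Registers` class, its method names, and the `IBar bar` parameter are all hypothetical, and real vi buffers do more (line-wise yanks, appending via uppercase names).

```python
class Registers:
    """Toy model of vi's named buffers: one text slot per buffer name."""

    def __init__(self):
        self._slots = {}

    def yank(self, name, text):
        """Store text in the named buffer, like "ay on a visual selection."""
        self._slots[name] = text

    def paste_before(self, name, line, col):
        """Insert the buffer's text before column col, like "aP."""
        return line[:col] + self._slots[name] + line[col:]


regs = Registers()

# First constructor: the parameter was typed once, then yanked into buffer a.
first = "public FooViewModel(IBar bar, IFoo foo)"
snippet = ", IFoo foo"
start = first.index(snippet)
regs.yank("a", first[start:start + len(snippet)])

# Second constructor: put the cursor on ")" and paste before it.
second = "public YetAnotherFooViewModel(IBar bar)"
result = regs.paste_before("a", second, second.index(")"))
print(result)  # public YetAnotherFooViewModel(IBar bar, IFoo foo)
```

The point is that the snippet is typed once and then replayed in every other constructor with three keystrokes.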

It is geeky, nerdy, experimental, and it might be helping my wrists a bit. 1 week so far, still going okay.

Another trick I use: variable names like “a”, “b”, “c”, which I then Ctrl-R Ctrl-R rename to something better later.

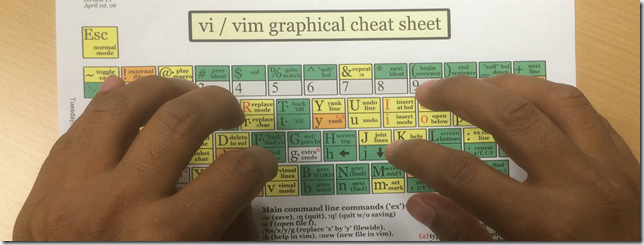

I would not recommend trying to learn vi without a vi-tutor type program.