Since before I got my 3D printer, I’ve wanted to make a scale replica of my house. I tried doing it with Legos once, but it was cost-prohibitive.

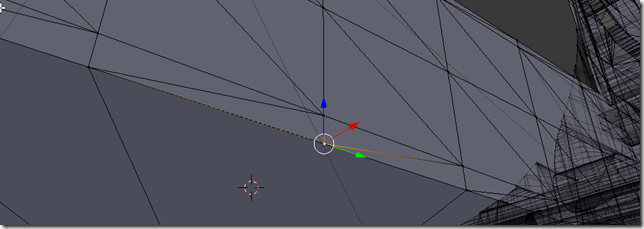

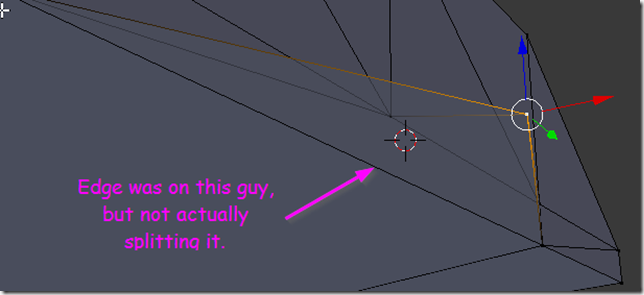

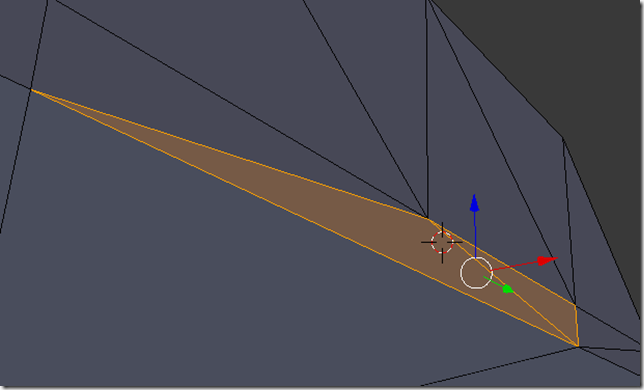

I came up with a workflow where I drew the entire house in SweetHome3D and then exported it, but I ran into manifold problems and similar issues.

So I modeled one of the floors in SketchUp. That was a painful task, and the resulting model was still too big to print in one piece (I want 1:24 or 1:36 scale). I’d have to slice the model into individual pieces, which meant cutting them so that they joined together with some kind of self-aligning joint.

I was about to try it again, but the sheer amount of detail that I had to go through kept holding me back. I wanted a formula.

A recent blog post brought OpenJSCAD to my attention, and an idea formed in my head:

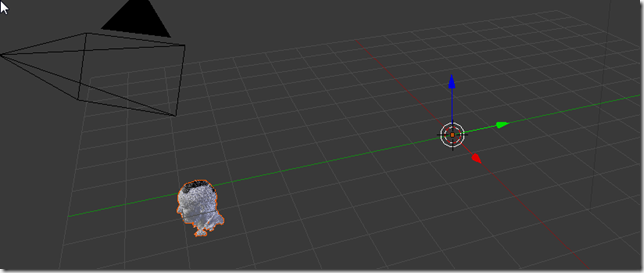

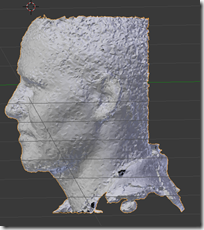

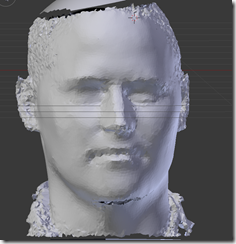

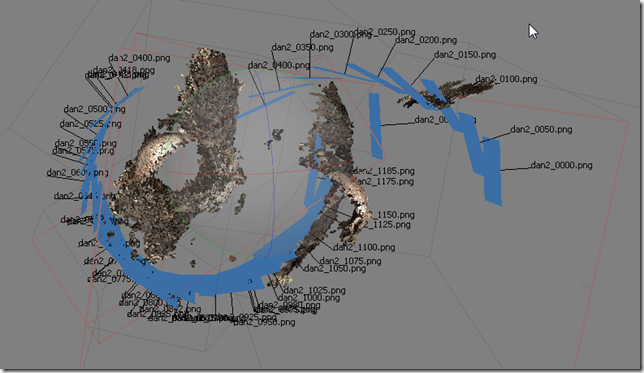

Convert THIS:

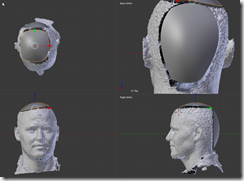

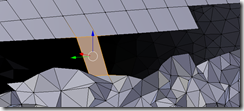

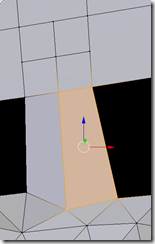

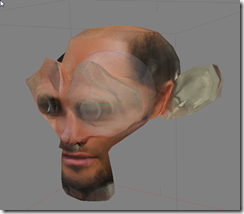

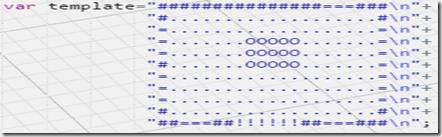

Into THIS:

I had tried to do something similar in OpenSCAD before. However, because that language lacks procedural features such as variables you can update as you go, I ran into all kinds of problems. Fresh new start!

So I set about doing it.

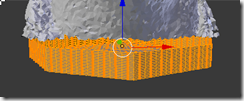

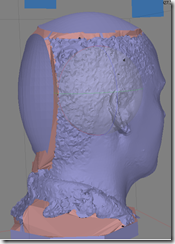

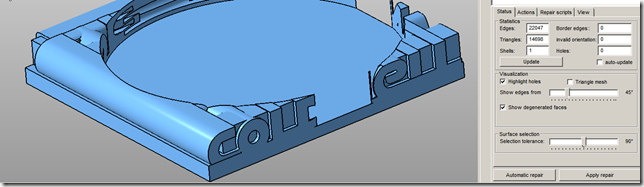

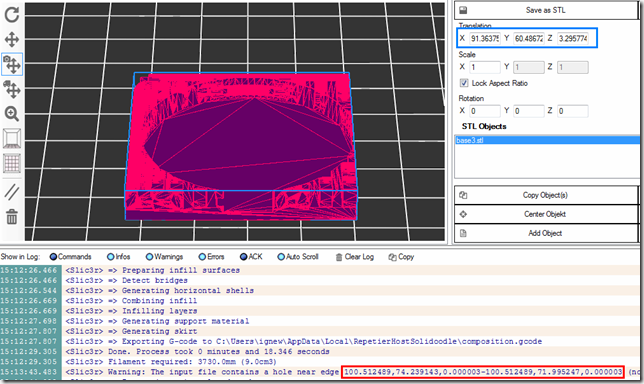

As you can see from this screenshot, I succeeded.

The code is here: https://github.com/sunnywiz/housejscad. It took me about 2 hours. If you look at the commit log, you can see that I committed every time I figured out even a small piece of the puzzle.

UPDATE 2/1/2015: the code as of this blog post is tagged “Post1” (https://github.com/sunnywiz/housejscad/releases/tag/Post1). The code has since evolved, and another blog post is in the works. I guess I could “release to main” every time I do a blog post. Heh.

The Code

- Provide a translation from each map character to a 1×1×10 primitive anchored at (0,0,0).

- Convert the template into a 2D array so that I can look for chunks of repeated characters.

- Walk the pattern, looking for chunks. Rather than get fancy, I made a list of all chunk sizes from 6×6 down to 2×1, and I check for each one in turn.

- There are more efficient ways to do this, but IAGNI.

- If a chunk is found, generate the primitive for that chunk, scale it up, and add it to the list. Then “consume” the characters that chunk covered so they aren’t matched again.

- When all done, union everything together.
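To make the steps above concrete, here is a minimal sketch of the chunking part in plain JavaScript. It is not the code from the housejscad repository: `parseTemplate`, `chunkSizes`, `blockMatches` and `findChunks` are hypothetical names, and it assumes a space in the template means “nothing here”. It also stops short of OpenJSCAD: instead of building CSG primitives, it returns chunk records `{ ch, row, col, w, h }` that a character mapping could later turn into scaled primitives before the final union.

```javascript
// Hypothetical sketch: split a character template into rectangular chunks
// of identical characters. Not the housejscad implementation.

// Turn a multi-line template string into a 2D array: grid[row][col].
function parseTemplate(template) {
  return template
    .split("\n")
    .filter((line) => line.length > 0)
    .map((line) => line.split(""));
}

// All chunk sizes as [width, height] up to max x max, largest area first,
// so 6x6 is tried first and 1x1 (always a match) comes last.
function chunkSizes(max) {
  const sizes = [];
  for (let h = max; h >= 1; h--) {
    for (let w = max; w >= 1; w--) {
      sizes.push([w, h]);
    }
  }
  return sizes.sort((a, b) => b[0] * b[1] - a[0] * a[1]);
}

// True if every cell in the w x h block starting at (row, col) holds ch.
// Cells outside the grid are undefined and consumed cells are null,
// so neither can match.
function blockMatches(grid, row, col, w, h, ch) {
  for (let r = row; r < row + h; r++) {
    for (let c = col; c < col + w; c++) {
      if (grid[r] === undefined || grid[r][c] !== ch) return false;
    }
  }
  return true;
}

// Walk the grid greedily, emitting one record per chunk and
// "consuming" (nulling out) the cells that chunk covers.
function findChunks(template, maxSize = 6) {
  const grid = parseTemplate(template);
  const sizes = chunkSizes(maxSize);
  const chunks = [];
  for (let row = 0; row < grid.length; row++) {
    for (let col = 0; col < grid[row].length; col++) {
      const ch = grid[row][col];
      if (ch === null || ch === " ") continue; // consumed, or empty (assumption)
      for (const [w, h] of sizes) {
        if (blockMatches(grid, row, col, w, h, ch)) {
          chunks.push({ ch, row, col, w, h });
          for (let r = row; r < row + h; r++) {
            for (let c = col; c < col + w; c++) grid[r][c] = null;
          }
          break;
        }
      }
    }
  }
  return chunks;
}
```

For example, `findChunks("#####\n#...#\n#####")` splits that 5×3 room into five chunks: the top wall as one 5×1 piece, each side wall as a 1×2 piece, the floor as a 3×1 piece, and the rest of the bottom wall as another 3×1 piece. Greedy largest-first search is not guaranteed to find the fewest chunks, but it is simple.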

Notes about the Code

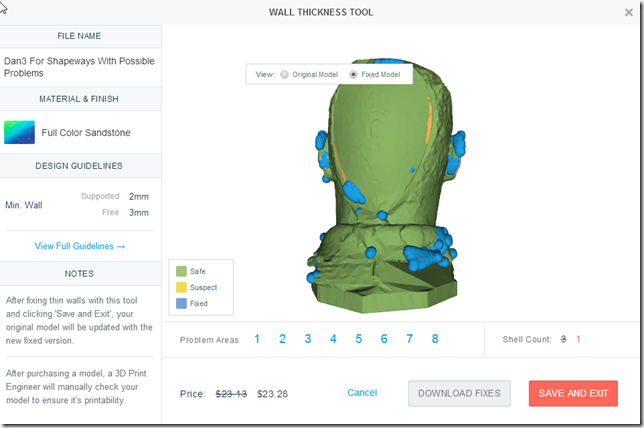

- The resulting file is not manifold; however, NetFabb fixes that pretty easily and reliably.

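- The chunking is only necessary if I want to represent steps in an area: a staircase has to know the size of the whole area it fills, which a single 1×1 cell can’t express. Otherwise, I wouldn’t need it.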

- You can define any mapping you want, from a character to a function that returns a CSG.

- This could probably generate dungeon levels pretty easily. Or maybe take a level from a game of NetHack and generate it? Coolness!
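Here is one way such a character mapping could look, assuming each builder receives the chunk size `(dx, dy)`. To keep the sketch runnable without OpenJSCAD, a builder returns a list of plain box descriptors instead of a CSG; `builders`, `box`, `buildChunk` and the `s` (stairs) character are all hypothetical. The stairs builder shows why chunking matters: it can only draw rising steps because it knows how deep the whole area is.

```javascript
// Hypothetical character-to-builder mapping. In OpenJSCAD each builder would
// return a CSG; here a "solid" is a list of axis-aligned box descriptors.

const WALL_HEIGHT = 10; // the post's base primitive is 1 x 1 x 10

// A box with its corner at (x, y, z) and size (sx, sy, sz).
const box = (x, y, z, sx, sy, sz) => ({ x, y, z, sx, sy, sz });

// Each builder draws a dx-by-dy area anchored at the origin.
const builders = {
  "#": (dx, dy) => [box(0, 0, 0, dx, dy, WALL_HEIGHT)], // full-height wall
  ".": (dx, dy) => [box(0, 0, 0, dx, dy, 1)], // thin floor slab
  // Stairs: one step per row, each one unit taller than the previous.
  s: (dx, dy) =>
    Array.from({ length: dy }, (_, i) => box(0, i, 0, dx, 1, i + 1)),
};

// Build one chunk record { ch, row, col, w, h } and shift it into place
// (column -> x, row -> y, no flip). Unmapped characters produce nothing.
function buildChunk({ ch, row, col, w, h }) {
  const build = builders[ch];
  if (build === undefined) return [];
  return build(w, h).map((b) => ({ ...b, x: b.x + col, y: b.y + row }));
}
```

With a plain 1×1 mapping, the `s` builder would only ever produce a single one-unit step; given a 2×3 chunk, `buildChunk` returns three boxes of heights 1, 2 and 3.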

Where would it go from here?

- Lay out an actual template of (part of) the house, and fine tune it from there.

- This would probably involve adding “I want the result to be exactly 150 by 145 mm”-style scaling.

- The functions will probably start taking arguments like (dx, dy) so that each function can draw something intelligent for an area that is dx by dy in size.

- I just noticed that the output is mirrored, because the template’s (R, C) axes don’t line up with the model’s (Y, X) axes.

- Preferably, I’d like to create an object/class that does this work, rather than the current style of coding. IAGNI at the moment. Then, maybe running in Node, I could take the different floors, convert them into objects, and do further manipulation on them.

- For example, slice them into top and bottom pieces. Windows and doors print a lot better upside down, with no support material necessary.

- I’d also need to slice them into horizontal pieces, since my build platform is limited to 6” square.

- I live in a very 90-degree-angle house, so this kind of solution works for me. Sorry if you live in a circular or slightly angled house; this solution is not for you. Buy me a house, and I’ll build you a solution. 😛

- I’m seriously thinking about this. I’d probably have a template of “points” and then a language like “draw a wall from A to D to E”, followed by “place a door on the wall from A to D at the intersection of F”, or something like that.
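Several of the ideas above (exact-size scaling, the mirroring, and the 6” build platform) come down to simple arithmetic. This is a minimal sketch, assuming the template is read top to bottom and viewed from above with +y pointing away from the viewer; `realToModelMm`, `fitScale`, `cellToXY` and `tilesNeeded` are hypothetical helpers, not part of housejscad, and the 30×29 template size is made up for illustration.

```javascript
// Hypothetical helpers for the scaling, mirroring and tiling ideas.
// None of these names come from housejscad.

const MM_PER_INCH = 25.4;

// Real-world length in feet -> model length in mm at a 1:ratio scale.
function realToModelMm(feet, ratio) {
  return (feet * 12 * MM_PER_INCH) / ratio;
}

// Uniform mm-per-cell so a cols x rows template fits inside
// targetW x targetH mm. Taking the smaller ratio keeps cells square.
function fitScale(cols, rows, targetW, targetH) {
  return Math.min(targetW / cols, targetH / rows);
}

// Template rows count downward from the first line, while +y points away
// from the viewer. Flipping the row index keeps the model from coming out
// as a mirror image of the template.
function cellToXY(row, col, rows, cellMm) {
  return { x: col * cellMm, y: (rows - 1 - row) * cellMm };
}

// Number of tiles needed along one axis to fit a square build plate.
function tilesNeeded(lengthMm, plateMm) {
  return Math.ceil(lengthMm / plateMm);
}

const PLATE_MM = 6 * MM_PER_INCH; // the post's 6" square build platform

const cell = fitScale(30, 29, 150, 145); // hypothetical 30 x 29 template
console.log(cell, tilesNeeded(150, PLATE_MM), realToModelMm(1, 24));
```

For that hypothetical template, a 150 by 145 mm target gives 5 mm cells, the 150 mm side fits on a 6” (152.4 mm) plate in a single tile, and at 1:24 one real foot becomes about 12.7 mm.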

A fun night of short and sweet coding. I had to look up a lot of JavaScript primitives, mostly around arrays of arrays and checking for undefined.

Someday I’ll get that “doll” house printed. Then I can make scale models of all my furniture from Legos! Fun fun.